AWS Cluster Autoscaler

Palette supports auto scaling for Amazon Elastic Kubernetes Service (EKS) host clusters through the AWS Cluster Autoscaler pack.

The Cluster Autoscaler pack monitors the cluster workload and utilizes Amazon EC2 Auto Scaling Groups to dynamically provision or shut down nodes, maximizing the cluster's performance and making it more resilient to failures. The Kubernetes clusters are resized under the following conditions:

- Scale-up: The Cluster Autoscaler triggers a scale-up operation when pods fail to schedule because the cluster lacks sufficient resources. It checks for unschedulable pods every 30 seconds and adds nodes so that the Kubernetes scheduler can place the affected pods on them. Scale-up does not happen for pods whose node affinity rules cannot be satisfied by any node group.

- Scale-down: The Cluster Autoscaler triggers a scale-down operation when a node has been underutilized for ten continuous minutes and its pods can be rescheduled on other available nodes. By default, a node is considered underutilized when its utilization is below 50% of its capacity. The Cluster Autoscaler calculates node utilization from the CPU and memory requests of the pods running on the node. When a node is underutilized, the Cluster Autoscaler moves its pods to other available nodes and then shuts down the node.
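The scale-down check can be sketched as follows. This is a simplified model, not the Cluster Autoscaler's own code: it assumes that utilization is the larger of the CPU-request and memory-request ratios against the node's allocatable resources, compared with the default 0.5 threshold. The NodeUsage type and the sample numbers are hypothetical.

```python
from dataclasses import dataclass

# Default utilization threshold described above (50% of node capacity).
SCALE_DOWN_THRESHOLD = 0.5


@dataclass
class NodeUsage:
    """Hypothetical summary of one node's requested and allocatable resources."""
    name: str
    cpu_requested_m: int      # sum of pod CPU requests, in millicores
    cpu_allocatable_m: int    # node allocatable CPU, in millicores
    mem_requested_mib: int    # sum of pod memory requests, in MiB
    mem_allocatable_mib: int  # node allocatable memory, in MiB


def utilization(node: NodeUsage) -> float:
    """Return the larger of the CPU and memory request ratios."""
    cpu_ratio = node.cpu_requested_m / node.cpu_allocatable_m
    mem_ratio = node.mem_requested_mib / node.mem_allocatable_mib
    return max(cpu_ratio, mem_ratio)


def is_scale_down_candidate(node: NodeUsage, threshold: float = SCALE_DOWN_THRESHOLD) -> bool:
    """True only when both ratios are below the threshold.

    The real autoscaler also requires the node to stay underutilized for the
    configured period (ten minutes here) and its pods to fit on other nodes.
    """
    return utilization(node) < threshold


if __name__ == "__main__":
    nodes = [
        NodeUsage("busy-node", 1500, 2000, 2048, 4096),  # 75% CPU, 50% memory
        NodeUsage("idle-node", 400, 2000, 1024, 4096),   # 20% CPU, 25% memory
    ]
    for n in nodes:
        print(n.name, f"{utilization(n):.2f}", is_scale_down_candidate(n))
```

Taking the maximum of the two ratios means a node that is busy on either CPU or memory is kept, which matches the requirement that both resources be underused.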

Versions Supported

- 1.29.x

- 1.28.x

- 1.27.x

- 1.26.x

- 1.22.x

- Deprecated

Version 1.29.x

Prerequisites

- An EKS host cluster with Kubernetes 1.24.x or higher.

- Permission to create an IAM policy in the AWS account you use with Palette.

Existing cluster profiles that use the manifest-based Cluster Autoscaler pack version 1.28.x or earlier cannot be upgraded directly to version 1.29.x of the pack based on Helm. To use version 1.29.x, you must first remove the old version of the pack from the cluster profile and then add the new one.
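Because the old pack is removed rather than upgraded, an expander value customized in the manifest-based pack presumably has to be re-entered in the Helm-based pack. The sketch below assumes that the dotted parameter names in the two Parameters tables correspond to nested keys in each pack's values.yaml; the helper names are hypothetical, and the functions work on already-parsed dictionaries rather than on YAML files.

```python
from copy import deepcopy
from typing import Any, Dict

# Parameter paths from the Parameters tables of the two pack flavors (assumed to
# correspond to nested keys in each pack's values.yaml).
MANIFEST_EXPANDER = "manifests.aws-cluster-autoscaler.expander"
HELM_EXPANDER = "charts.cluster-autoscaler.extraArgs.expander"


def get_path(values: Dict[str, Any], dotted: str) -> Any:
    """Walk nested dictionaries with a dotted key; return None if any key is missing."""
    node: Any = values
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def set_path(values: Dict[str, Any], dotted: str, value: Any) -> None:
    """Set a leaf value, creating intermediate dictionaries as needed."""
    keys = dotted.split(".")
    node = values
    for key in keys[:-1]:
        node = node.setdefault(key, {})
    node[keys[-1]] = value


def carry_over_expander(manifest_values: Dict[str, Any], helm_values: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the Helm pack values with the old expander applied, if one was set."""
    result = deepcopy(helm_values)
    expander = get_path(manifest_values, MANIFEST_EXPANDER)
    if expander is not None:
        set_path(result, HELM_EXPANDER, expander)
    return result


if __name__ == "__main__":
    old = {"manifests": {"aws-cluster-autoscaler": {"expander": "most-pods"}}}
    new = {"charts": {"cluster-autoscaler": {"extraArgs": {"expander": "least-waste"}}}}
    merged = carry_over_expander(old, new)
    print(get_path(merged, HELM_EXPANDER))  # most-pods
```

The function copies the Helm values instead of editing them in place, so the pack defaults stay untouched if you want to compare before and after.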

Parameters

| Parameter | Description | Default Value | Required |
|---|---|---|---|
| charts.cluster-autoscaler.awsRegion | The AWS region where the resources are deployed. | us-east-1 | Yes |
| charts.cluster-autoscaler.autoDiscovery.clusterName | The name of the EKS host cluster. The Cluster Autoscaler uses it to automatically discover the cluster's Auto Scaling Groups. | {{ .spectro.system.cluster.name }} | Yes |
| charts.cluster-autoscaler.cloudProvider | The cloud provider where the Cluster Autoscaler is deployed. | aws | Yes |
| charts.cluster-autoscaler.extraArgs.expander | The strategy for choosing which Auto Scaling Group (ASG) to expand. Options are random, most-pods, and least-waste. random scales up a random ASG. most-pods scales up the ASG that can schedule the most pods. least-waste scales up the ASG that leaves the least CPU and memory unused. | least-waste | Yes |
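To make the three expander options concrete, here is a toy model of how each one might choose among candidate ASGs. It is a simplification, not the autoscaler's implementation: each hypothetical Option records how many pending pods a new node would schedule and how much of its CPU and memory would stay idle, and least-waste is assumed to score an option by the average of those two idle fractions.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Option:
    """Hypothetical scale-up option: one ASG and the effect of adding a node to it."""
    asg: str
    pods_scheduled: int       # pending pods that would fit on the new node
    idle_cpu_fraction: float  # share of the new node's CPU left unrequested (0 to 1)
    idle_mem_fraction: float  # share of the new node's memory left unrequested (0 to 1)


def choose(options: List[Option], expander: str, rng: Optional[random.Random] = None) -> Option:
    """Pick one option following the expander descriptions in the table above."""
    if not options:
        raise ValueError("no scale-up options available")
    if expander == "random":
        return (rng or random.Random()).choice(options)
    if expander == "most-pods":
        # max() keeps the first option when several schedule the same number of pods.
        return max(options, key=lambda o: o.pods_scheduled)
    if expander == "least-waste":
        # Assumed scoring: average of the idle CPU and idle memory fractions.
        return min(options, key=lambda o: (o.idle_cpu_fraction + o.idle_mem_fraction) / 2)
    raise ValueError(f"unsupported expander: {expander}")


if __name__ == "__main__":
    options = [
        Option("asg-large", pods_scheduled=6, idle_cpu_fraction=0.60, idle_mem_fraction=0.70),
        Option("asg-medium", pods_scheduled=4, idle_cpu_fraction=0.10, idle_mem_fraction=0.20),
    ]
    print(choose(options, "most-pods").asg)    # asg-large
    print(choose(options, "least-waste").asg)  # asg-medium
```

The example shows why the default, least-waste, can pick a group that schedules fewer pods: it favors the smaller instance type that leaves less capacity idle.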

Usage

The Helm-based Cluster Autoscaler pack is available for Amazon EKS host clusters. To deploy the pack, you must first define an IAM policy in the AWS account associated with Palette. This policy allows the Cluster Autoscaler to scale the cluster's node groups.

Use the following steps to create the IAM policy and deploy the Cluster Autoscaler pack.

-

In AWS, create a new IAM policy using the snippet below and give it a name, for example, PaletteEKSClusterAutoscaler. Refer to the Creating IAM policies guide for instructions.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:DescribeAutoScalingInstances",

"autoscaling:DescribeLaunchConfigurations",

"autoscaling:DescribeScalingActivities",

"autoscaling:DescribeTags",

"ec2:DescribeInstanceTypes",

"ec2:DescribeLaunchTemplateVersions"

],

"Resource": ["*"]

},

{

"Effect": "Allow",

"Action": [

"autoscaling:SetDesiredCapacity",

"autoscaling:TerminateInstanceInAutoScalingGroup",

"ec2:DescribeImages",

"ec2:GetInstanceTypesFromInstanceRequirements",

"eks:DescribeNodegroup"

],

"Resource": ["*"]

}

]

} -

Copy the IAM policy Amazon Resource Name (ARN). Your policy ARN should be similar to

arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler. -

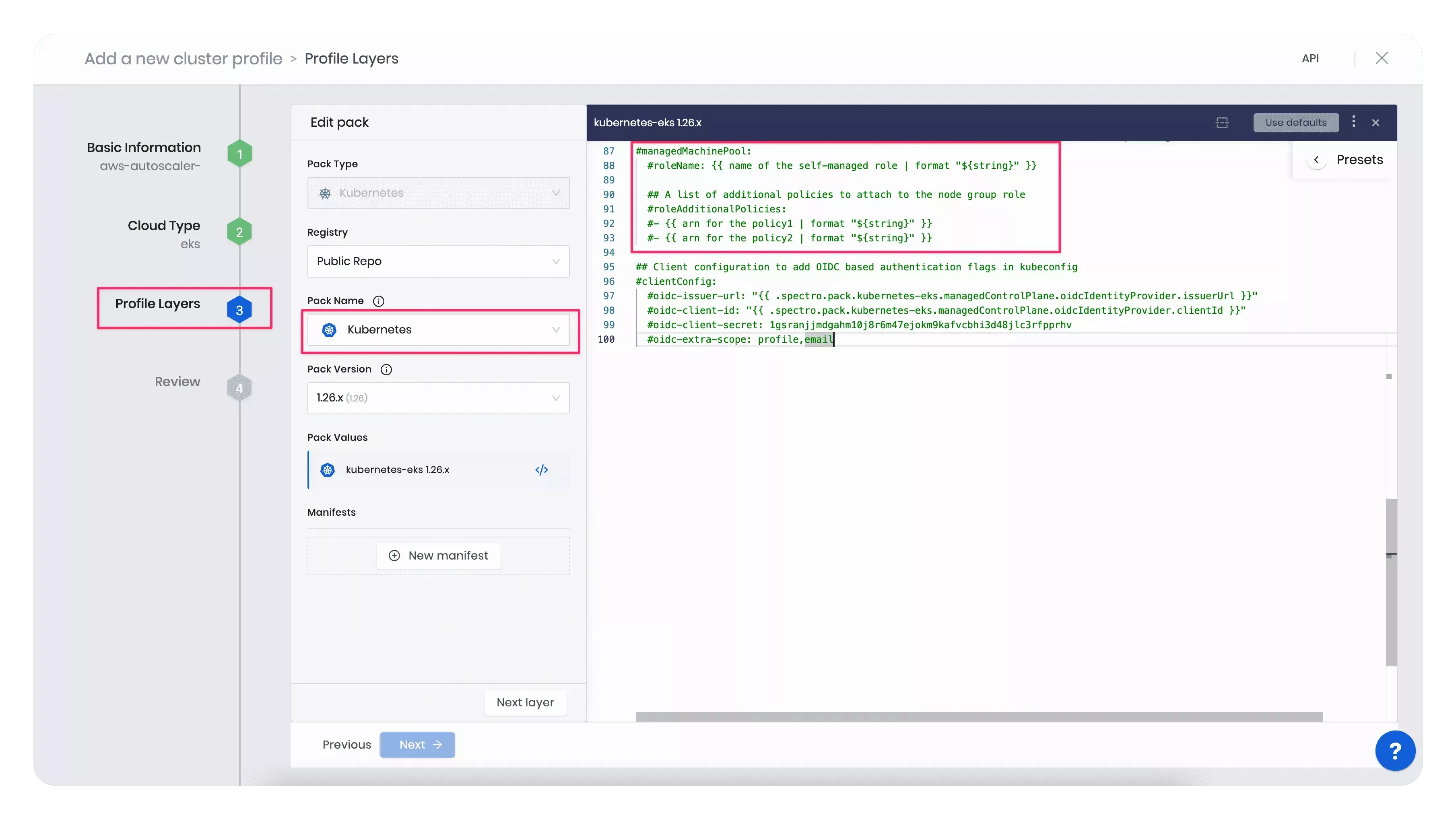

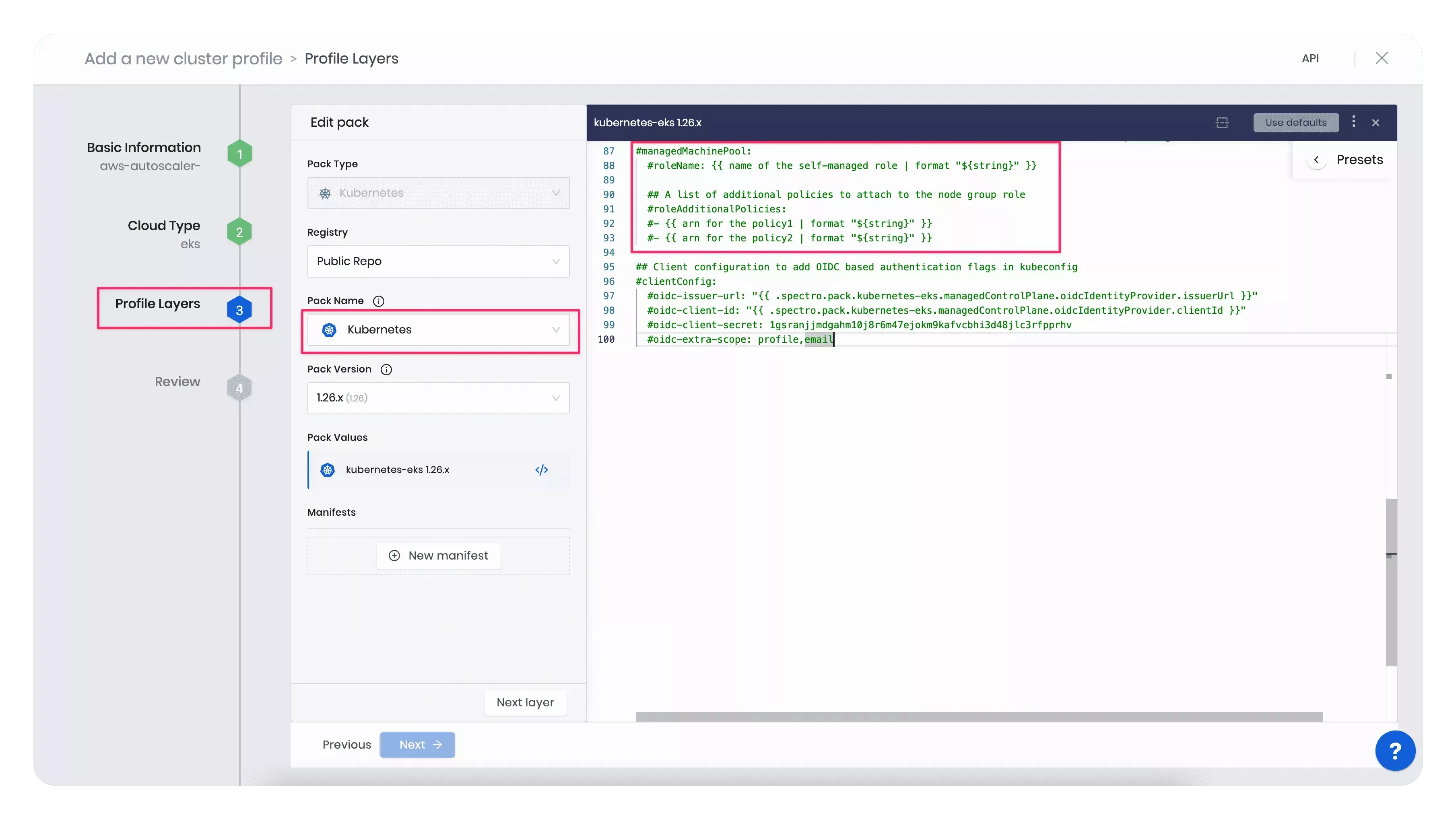

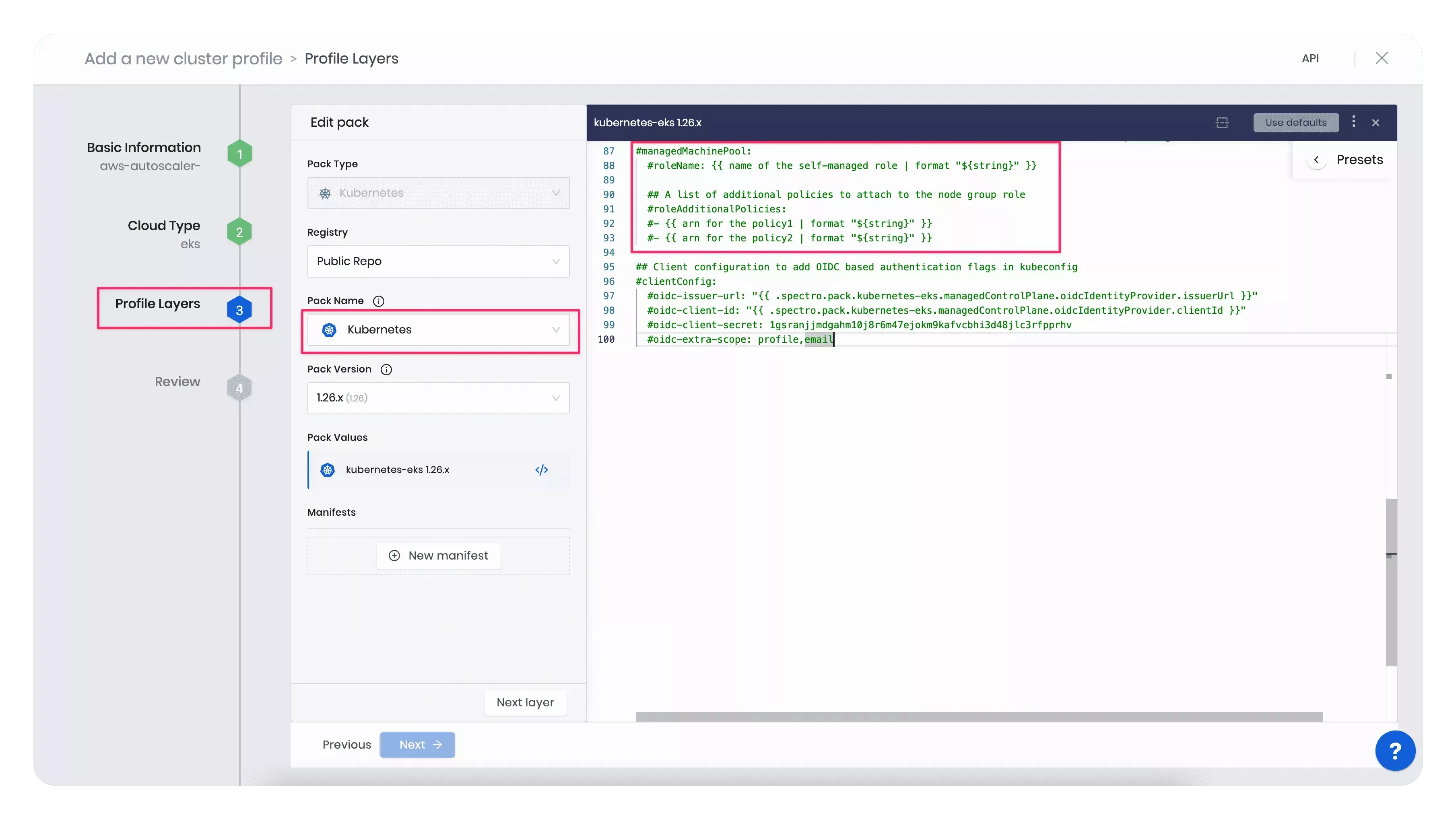

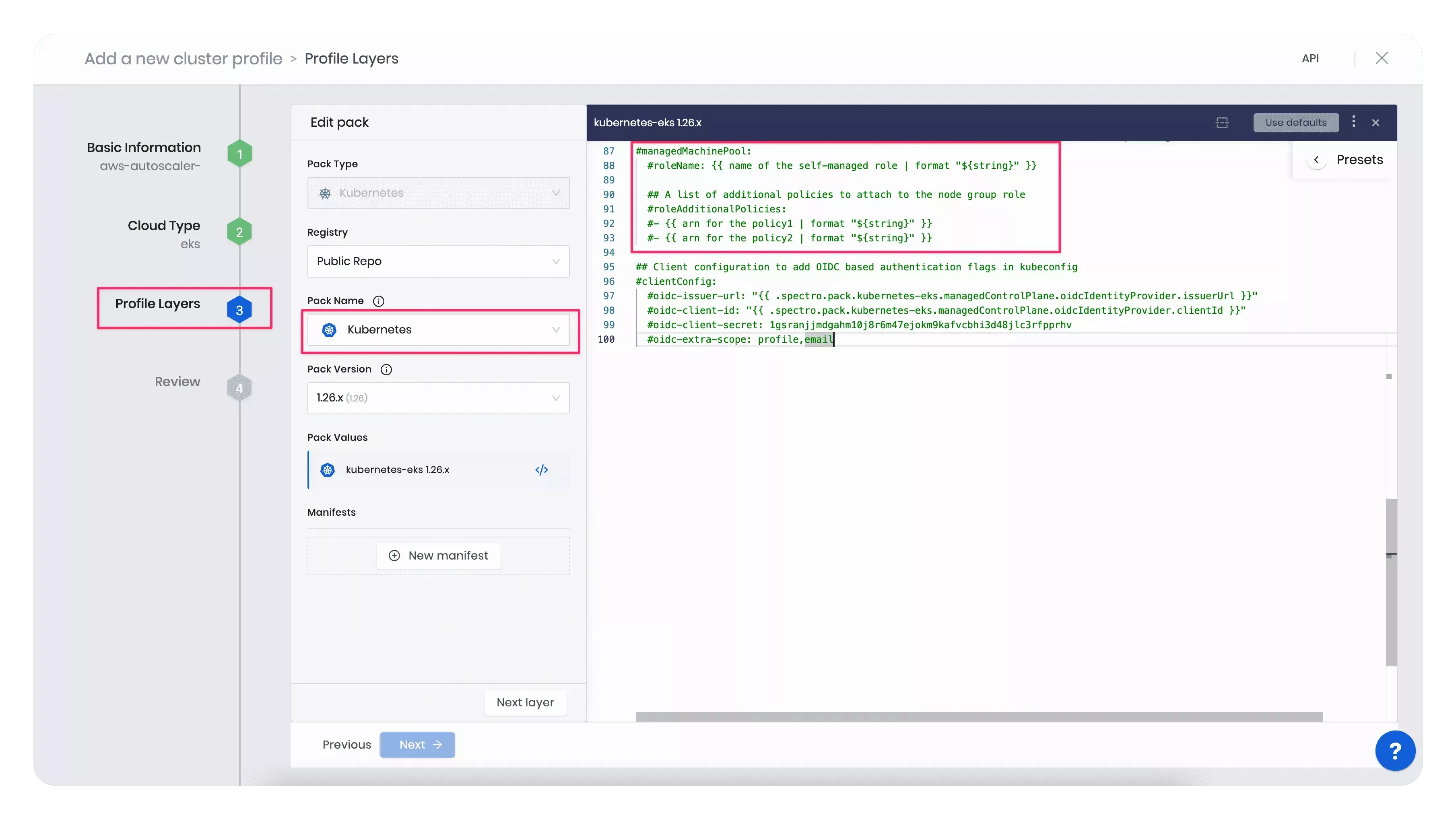

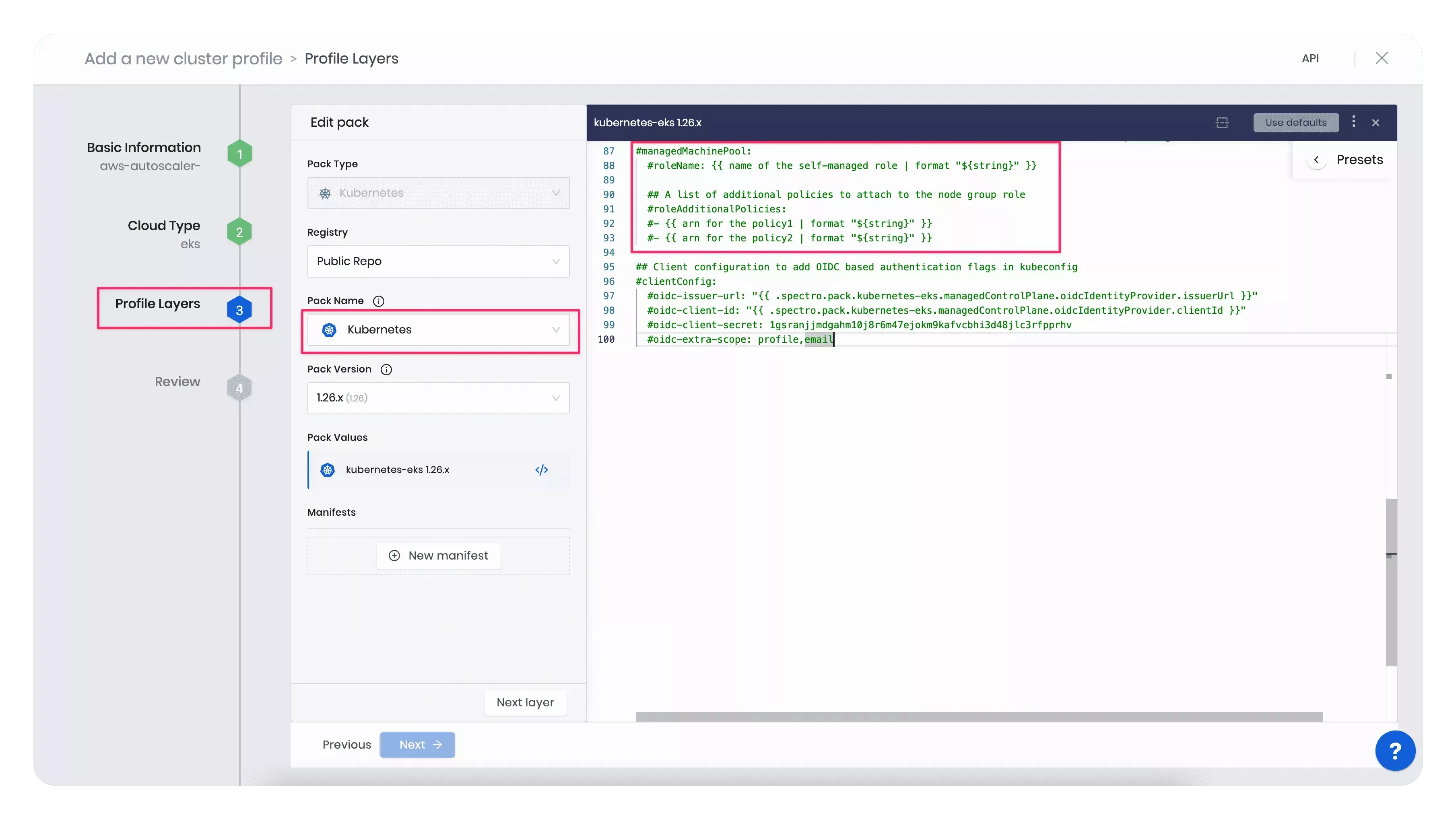

During the cluster profile creation, modify the

managedMachinePool.roleAdditionalPoliciessection in the values.yaml file of the Kubernetes pack with the created IAM policy ARN. Palette will attach the IAM policy to your cluster's node group during cluster deployment. The snapshot below illustrates the specific section to update with the policy ARN.

For example, the code block below displays the updated

managedMachinePool.roleAdditionalPoliciessection with a sample policy ARN.managedMachinePool:

# roleName: {{ name of the self-managed role | format "${string}" }}

# A list of additional policies to attach to the node group role

roleAdditionalPolicies:

- "arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler"tipInstead of updating the Kubernetes pack's values.yaml file, you can alternatively add an inline IAM policy to the cluster's node group post deployment. Refer to the Adding IAM identity permissions guide to learn how to embed an inline policy for a user or role.

-

Once you included all the infrastructure pack layers to your cluster profile, add the AWS Cluster Autoscaler pack.

warningThe values.yaml file of the Cluster Autoscaler pack includes a section for setting the minimum and maximum size of the autoscaling groups. However, this section should not be used, and this configuration must be done from the Palette UI, according to step 5 of this guide.

-

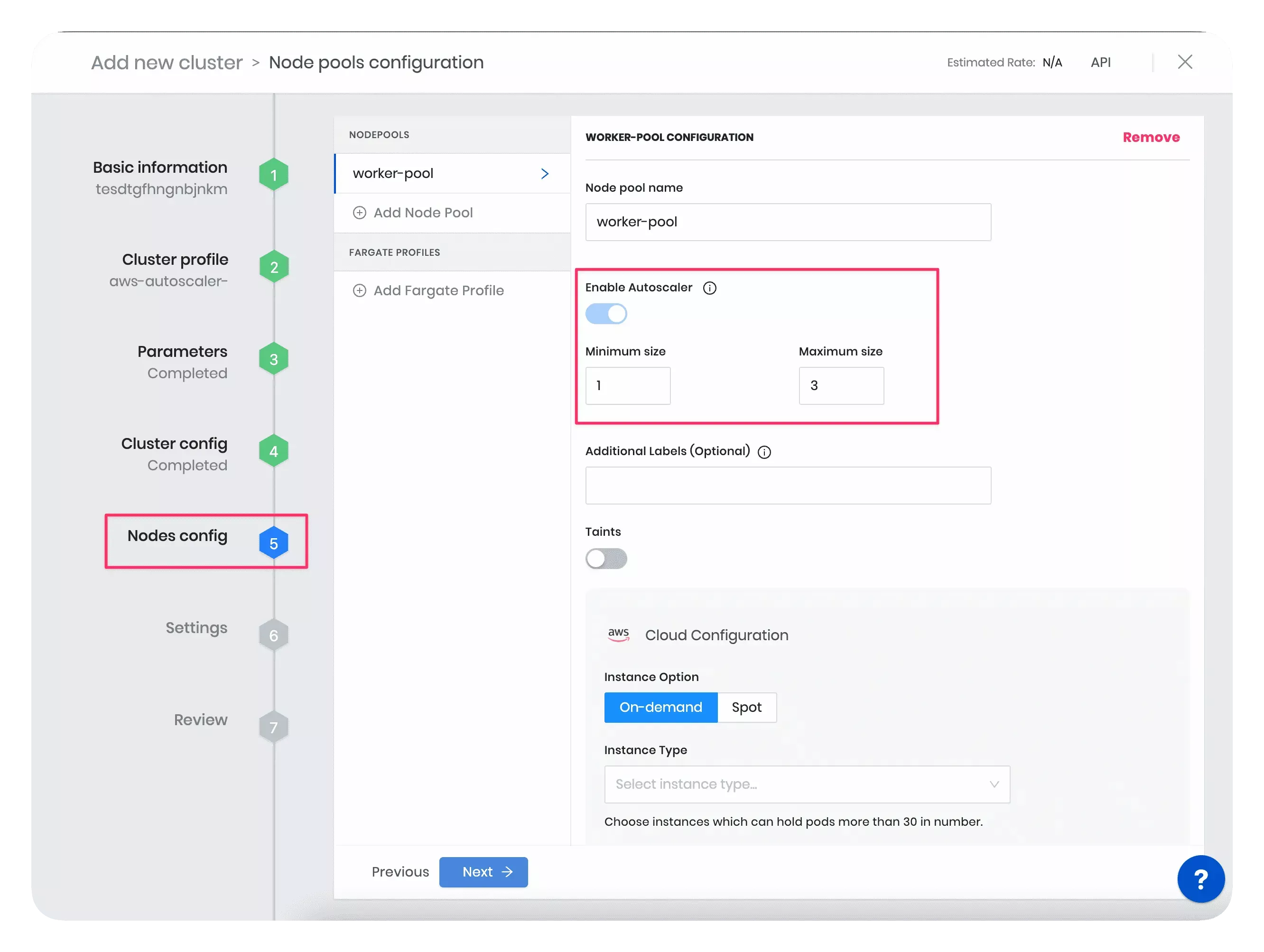

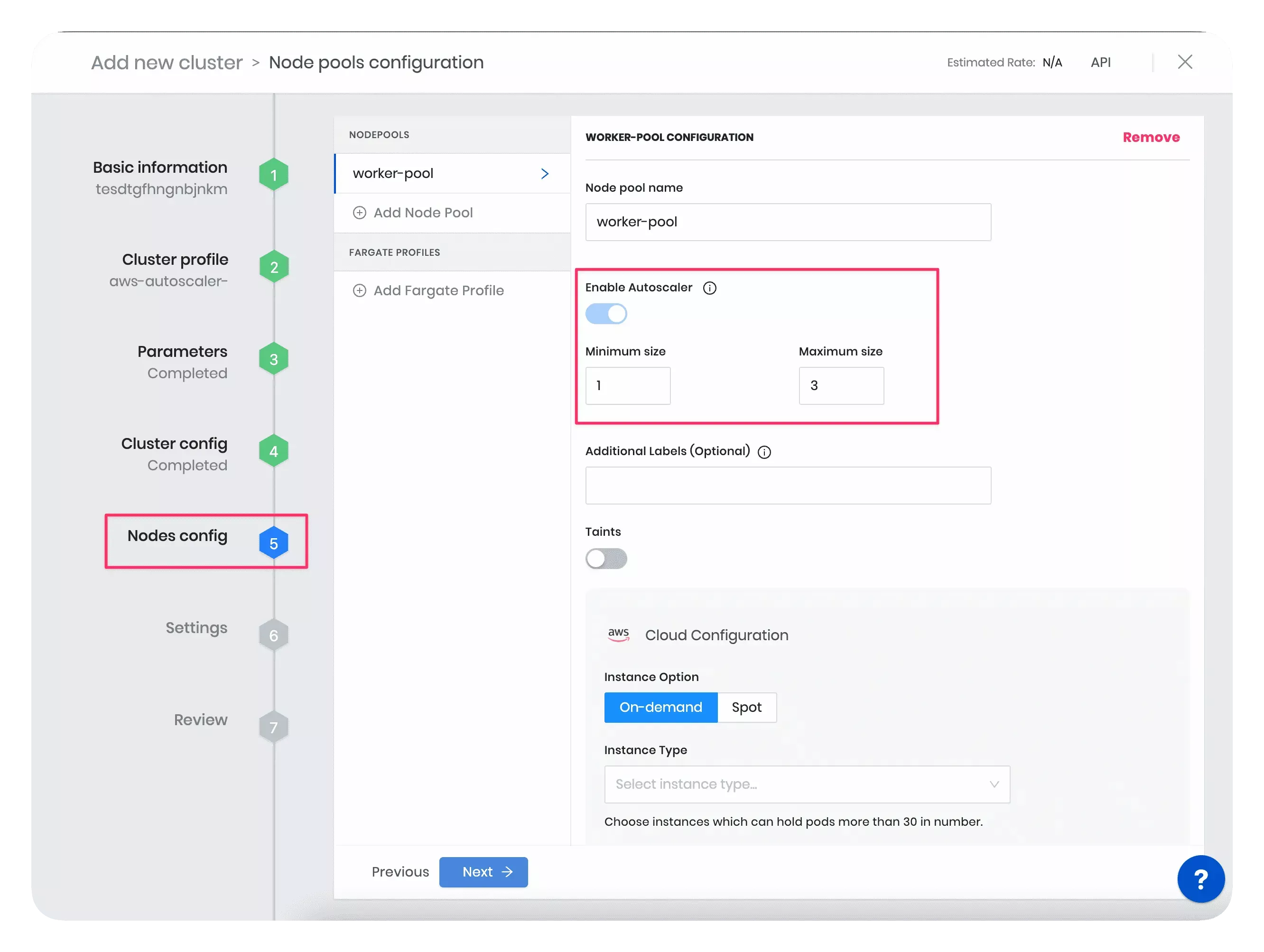

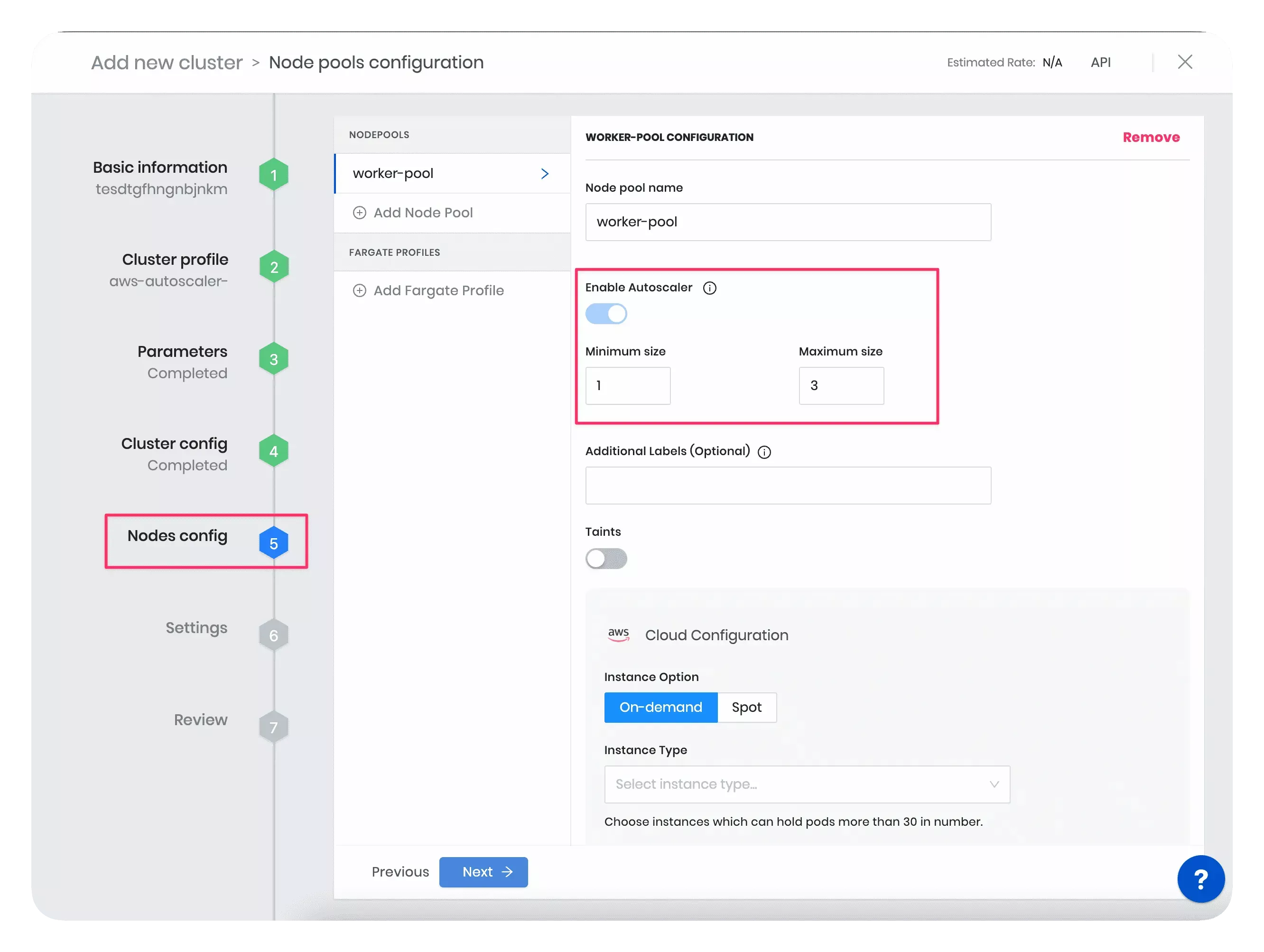

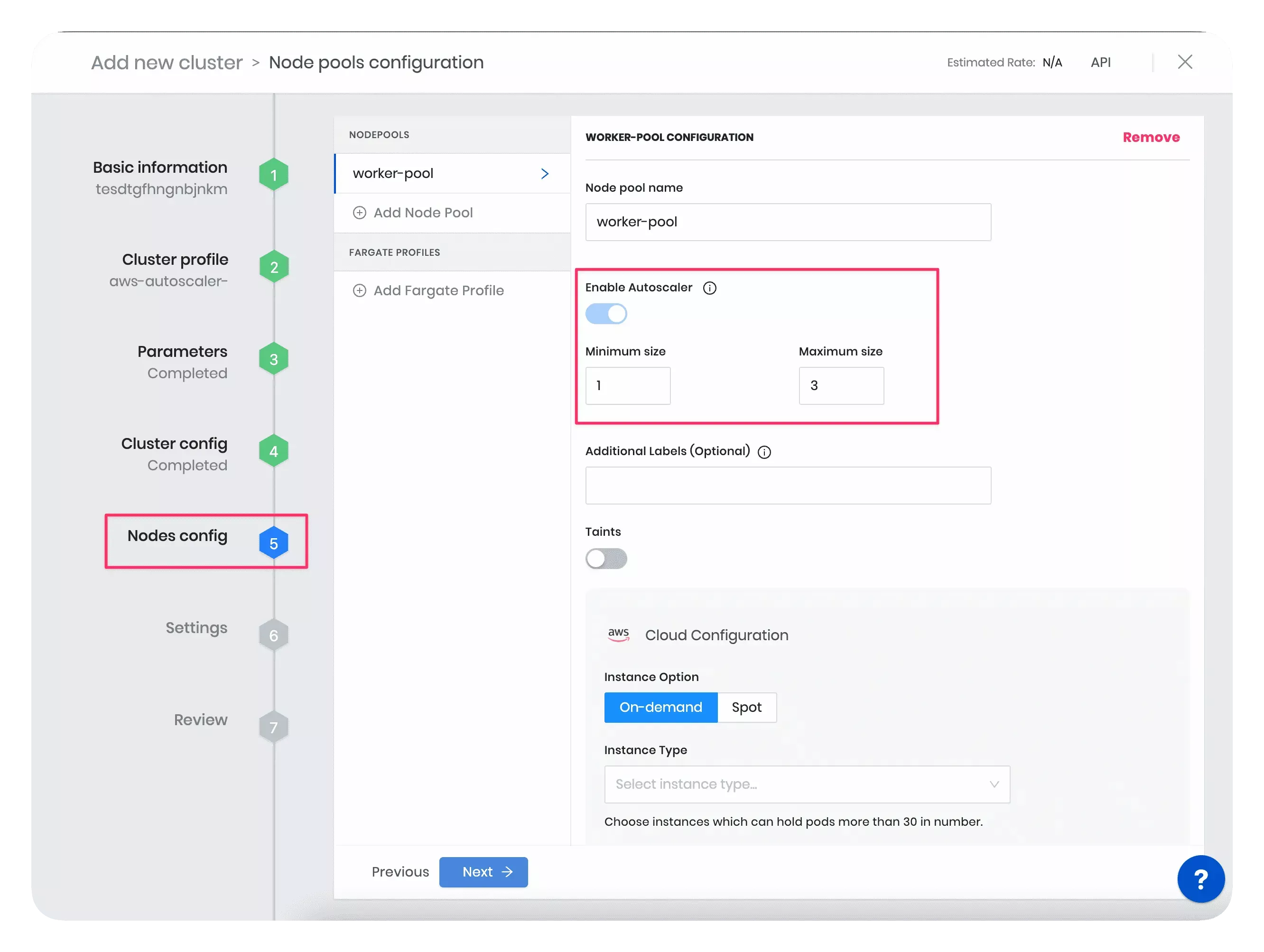

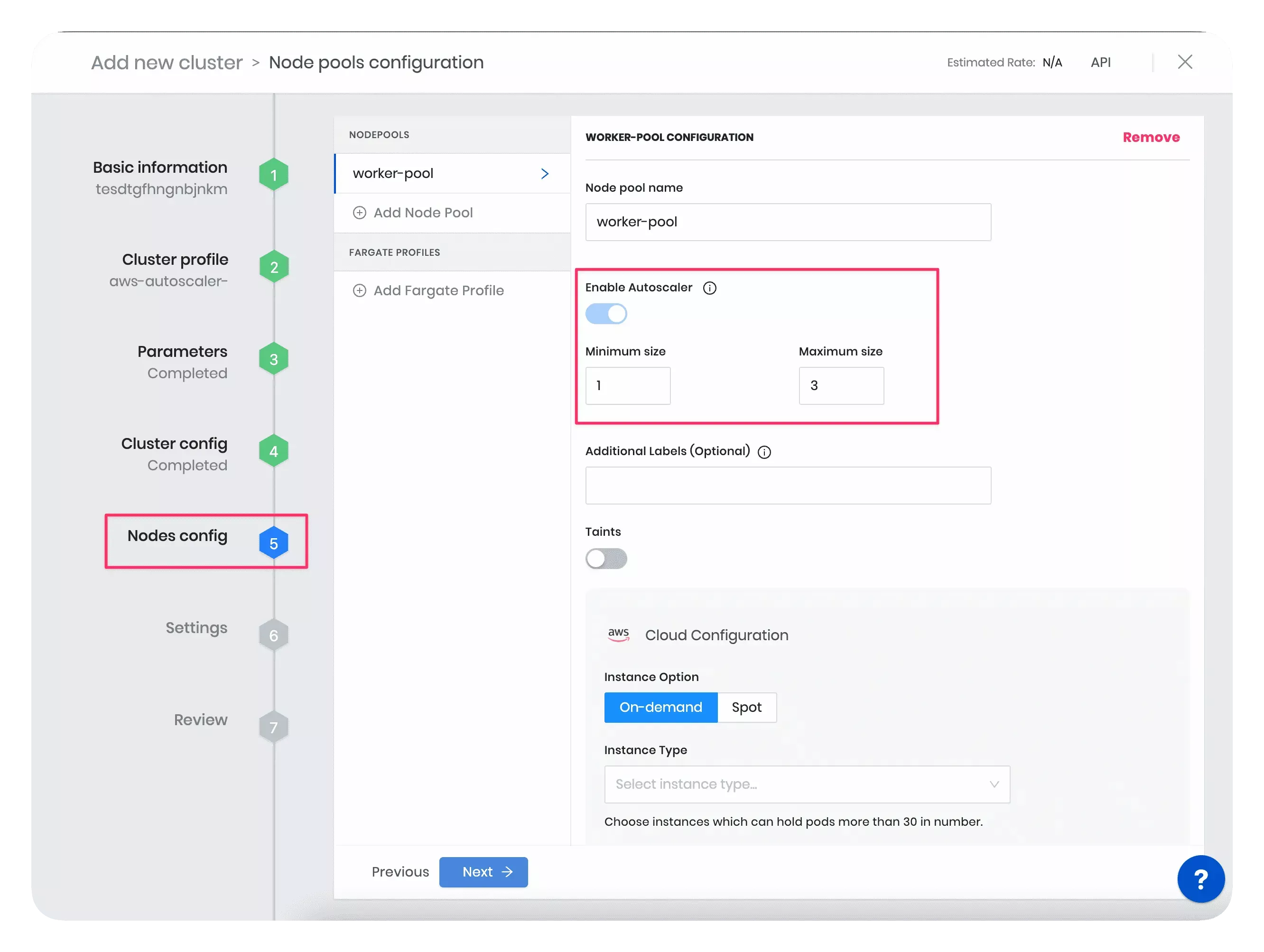

Next, use the created cluster profile to deploy a cluster. In the Nodes Config section, specify the minimum and maximum number of worker pool nodes and the instance type that suits your workload. Your worker pool will have at least the minimum number of nodes you set up, and when scaling up, it will not exceed the maximum number of nodes configured. Note that each configured node pool will represent one ASG.

The snapshot below displays an example of the cluster's Nodes Config section.

tip

tipYou can also edit the minimum and maximum number of worker pool nodes for a deployed cluster directly in the Palette UI.
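Creating the policy in step 1 and reading back the node pool limits from step 5 can also be scripted. The sketch below uses boto3's IAM create_policy and Auto Scaling describe_auto_scaling_groups calls. The clients are passed in, so you would call the functions with real clients such as boto3.client("iam") and boto3.client("autoscaling") once boto3 and AWS credentials are set up. The function names are hypothetical, the action lists mirror the policy in step 1, and the ASG names you pass are placeholders you look up in your AWS account. The ASG helper only reads sizes; per the warning above, set them in the Palette UI.

```python
import json
from typing import Any, Dict, List, Tuple

# Actions copied from the two statements of the IAM policy in step 1.
STATEMENT_1_ACTIONS = [
    "autoscaling:DescribeAutoScalingGroups",
    "autoscaling:DescribeAutoScalingInstances",
    "autoscaling:DescribeLaunchConfigurations",
    "autoscaling:DescribeScalingActivities",
    "autoscaling:DescribeTags",
    "ec2:DescribeInstanceTypes",
    "ec2:DescribeLaunchTemplateVersions",
]
STATEMENT_2_ACTIONS = [
    "autoscaling:SetDesiredCapacity",
    "autoscaling:TerminateInstanceInAutoScalingGroup",
    "ec2:DescribeImages",
    "ec2:GetInstanceTypesFromInstanceRequirements",
    "eks:DescribeNodegroup",
]


def build_policy_document() -> Dict[str, Any]:
    """Return the policy from step 1 as a Python dictionary."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": STATEMENT_1_ACTIONS, "Resource": ["*"]},
            {"Effect": "Allow", "Action": STATEMENT_2_ACTIONS, "Resource": ["*"]},
        ],
    }


def create_autoscaler_policy(iam_client: Any, name: str = "PaletteEKSClusterAutoscaler") -> str:
    """Create the policy and return its ARN for managedMachinePool.roleAdditionalPolicies."""
    response = iam_client.create_policy(
        PolicyName=name,
        PolicyDocument=json.dumps(build_policy_document()),
    )
    return response["Policy"]["Arn"]


def asg_limits(autoscaling_client: Any, asg_names: List[str]) -> Dict[str, Tuple[int, int, int]]:
    """Return (min, desired, max) for each named ASG.

    Only the first page of results is read; use a paginator for many ASGs.
    """
    response = autoscaling_client.describe_auto_scaling_groups(AutoScalingGroupNames=asg_names)
    return {
        group["AutoScalingGroupName"]: (group["MinSize"], group["DesiredCapacity"], group["MaxSize"])
        for group in response["AutoScalingGroups"]
    }
```

Passing the clients in keeps the functions free of import-time AWS dependencies and makes them easy to exercise with stub objects before running them against a real account.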

Resize the Cluster

In this example, you will resize the worker pool nodes to better understand the scaling behavior of the Cluster Autoscaler pack. First, you will create a cluster with large-sized worker pool instances. Then, you will manually reduce the instance size. This will lead to insufficient resources for existing pods and multiple pod failures in the cluster. As a result, the Cluster Autoscaler will provision new smaller-sized nodes with enough capacity to accommodate the current workload and reschedule the affected pods on new nodes. Follow the steps below to trigger the Cluster Autoscaler and the pod rescheduling event.
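While the resize is in progress, you can watch for the pods that trigger the scale-up. The sketch below uses the official kubernetes Python client method CoreV1Api.list_pod_for_all_namespaces with a status.phase=Pending field selector, then keeps the pods whose PodScheduled condition reports the Unschedulable reason. The function name is hypothetical; the API object is passed in, so you can create it with config.load_kube_config() followed by client.CoreV1Api().

```python
from typing import Any, List


def unschedulable_pods(core_v1: Any) -> List[str]:
    """Return namespace/name for pending pods that the scheduler could not place."""
    pods = core_v1.list_pod_for_all_namespaces(field_selector="status.phase=Pending")
    names = []
    for pod in pods.items:
        # conditions can be None for pods that were just created.
        for cond in pod.status.conditions or []:
            if cond.type == "PodScheduled" and cond.status == "False" and cond.reason == "Unschedulable":
                names.append(f"{pod.metadata.namespace}/{pod.metadata.name}")
                break
    return names
```

Pods that show up in this list right after you shrink the instance type are the ones the Cluster Autoscaler should respond to; the list should empty out once the new nodes join.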

-

During the cluster deployment, in the Nodes Config section, choose a large-sized instance type. For example, you can select the worker pool instance size as t3.2xlarge (8 vCPUs, 32 GB RAM) or higher.

-

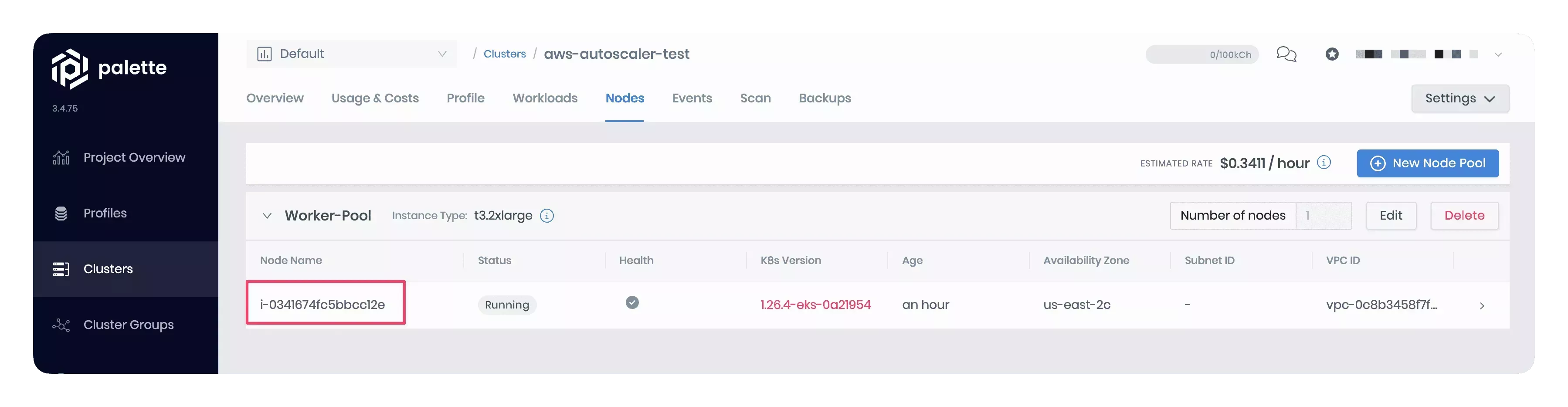

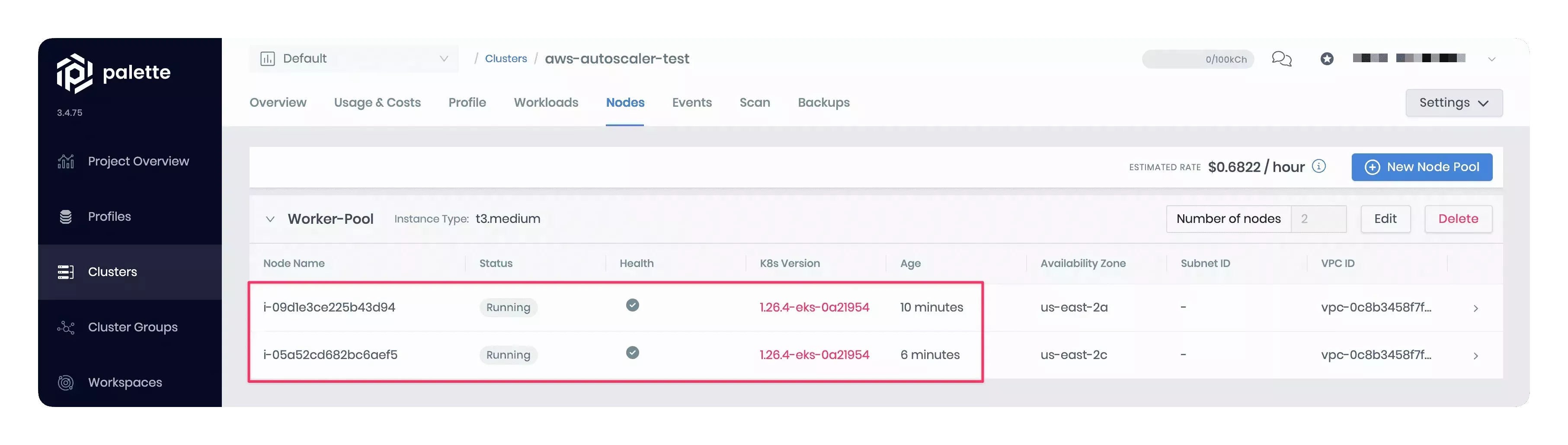

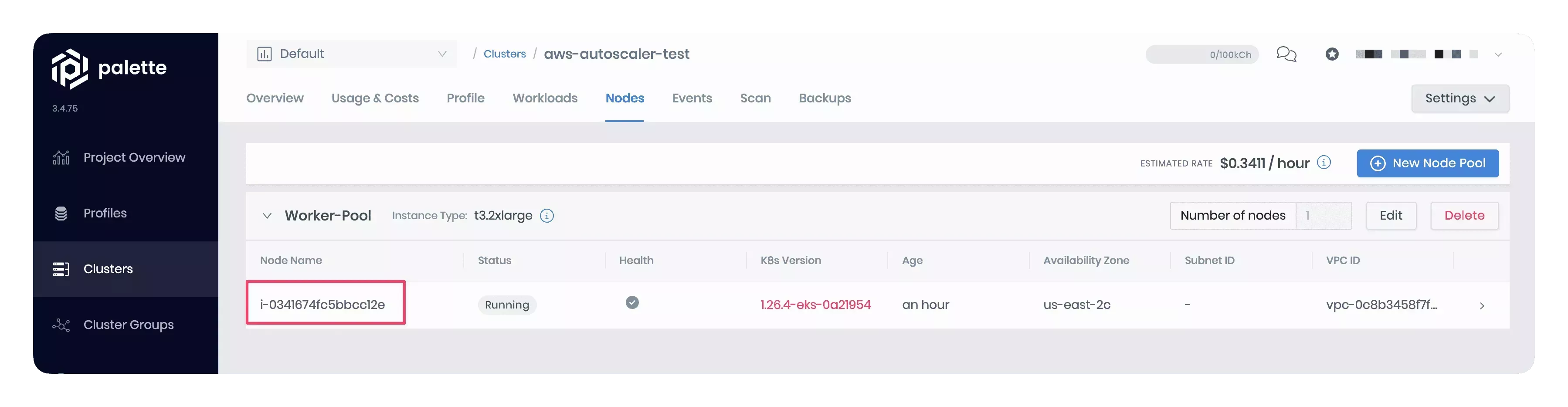

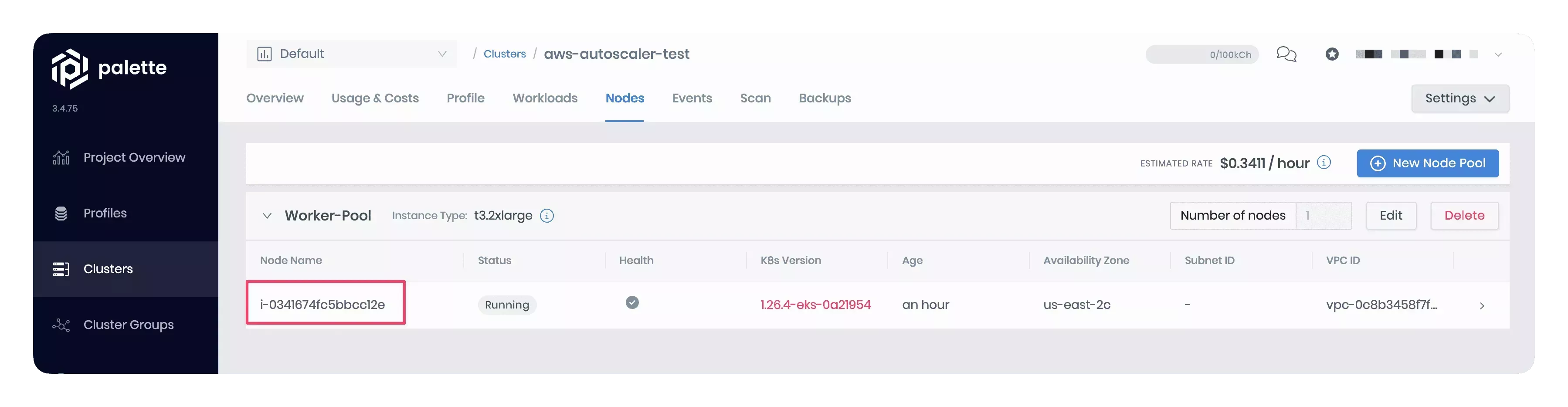

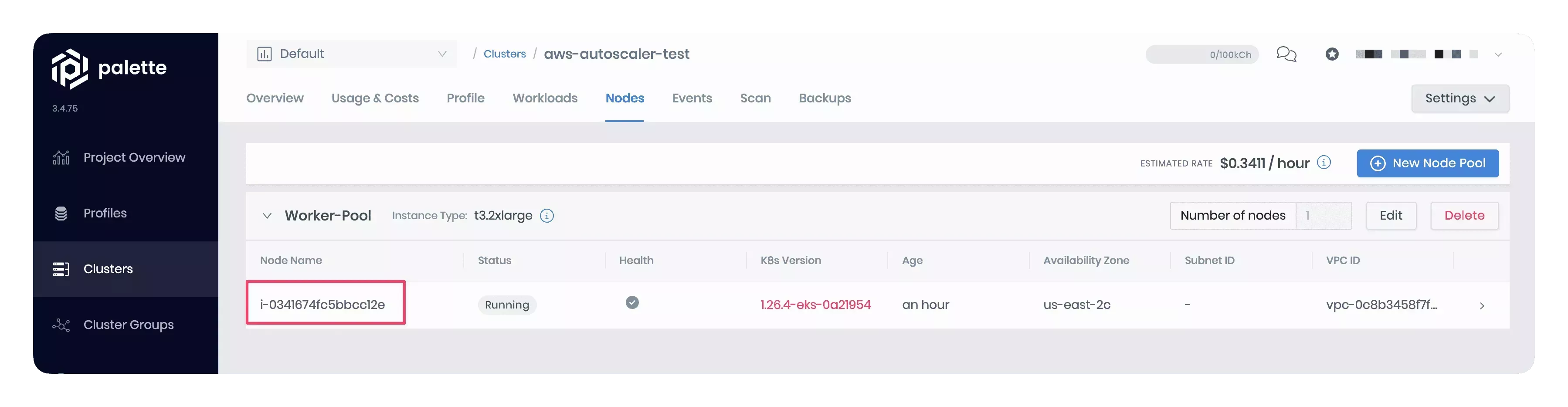

Once the cluster is deployed, go to the Nodes tab in the cluster details page in Palette. Observe the count and size of nodes. The snapshot below displays one node of the type t3.2xlarge in the cluster's worker pool.

-

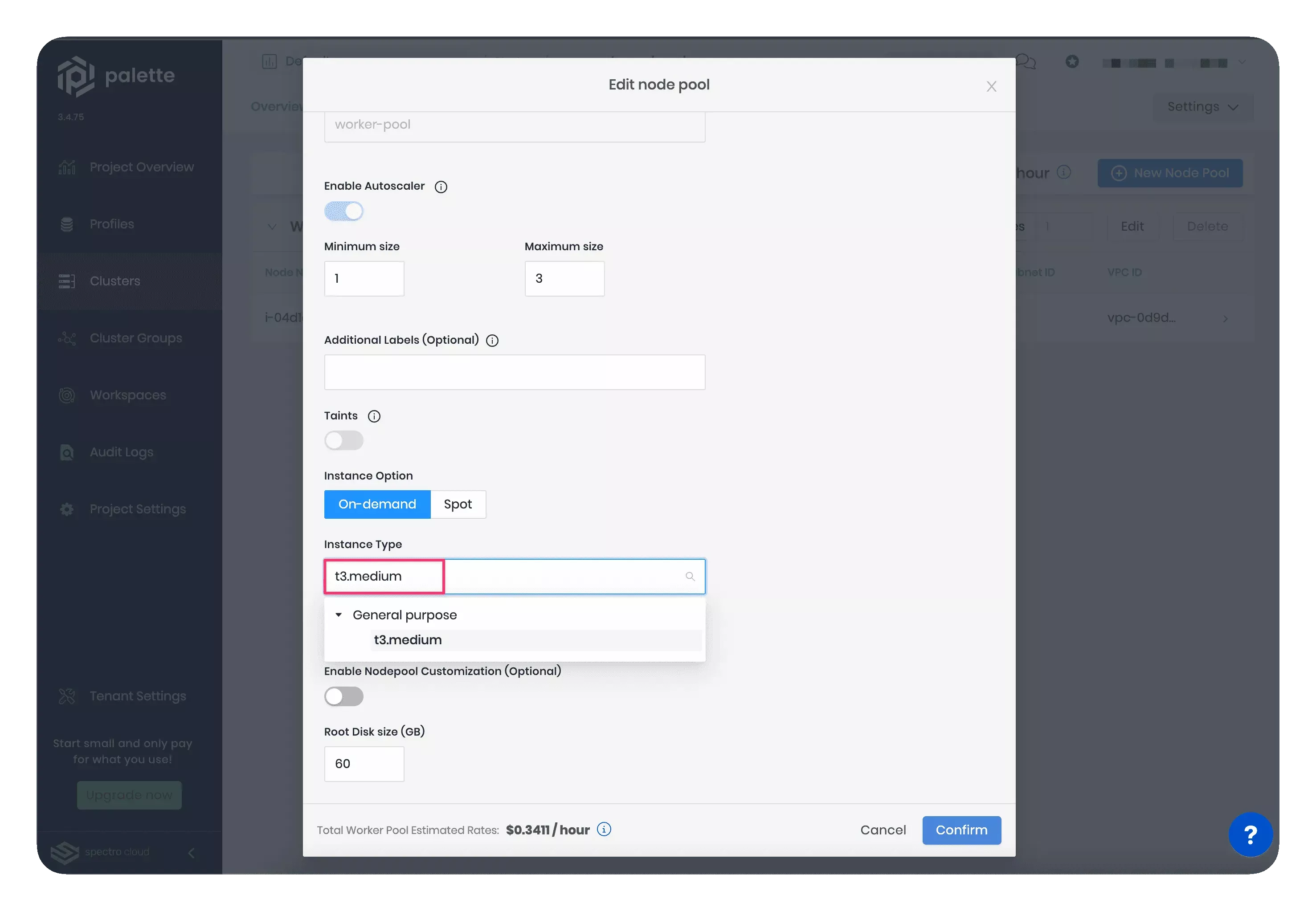

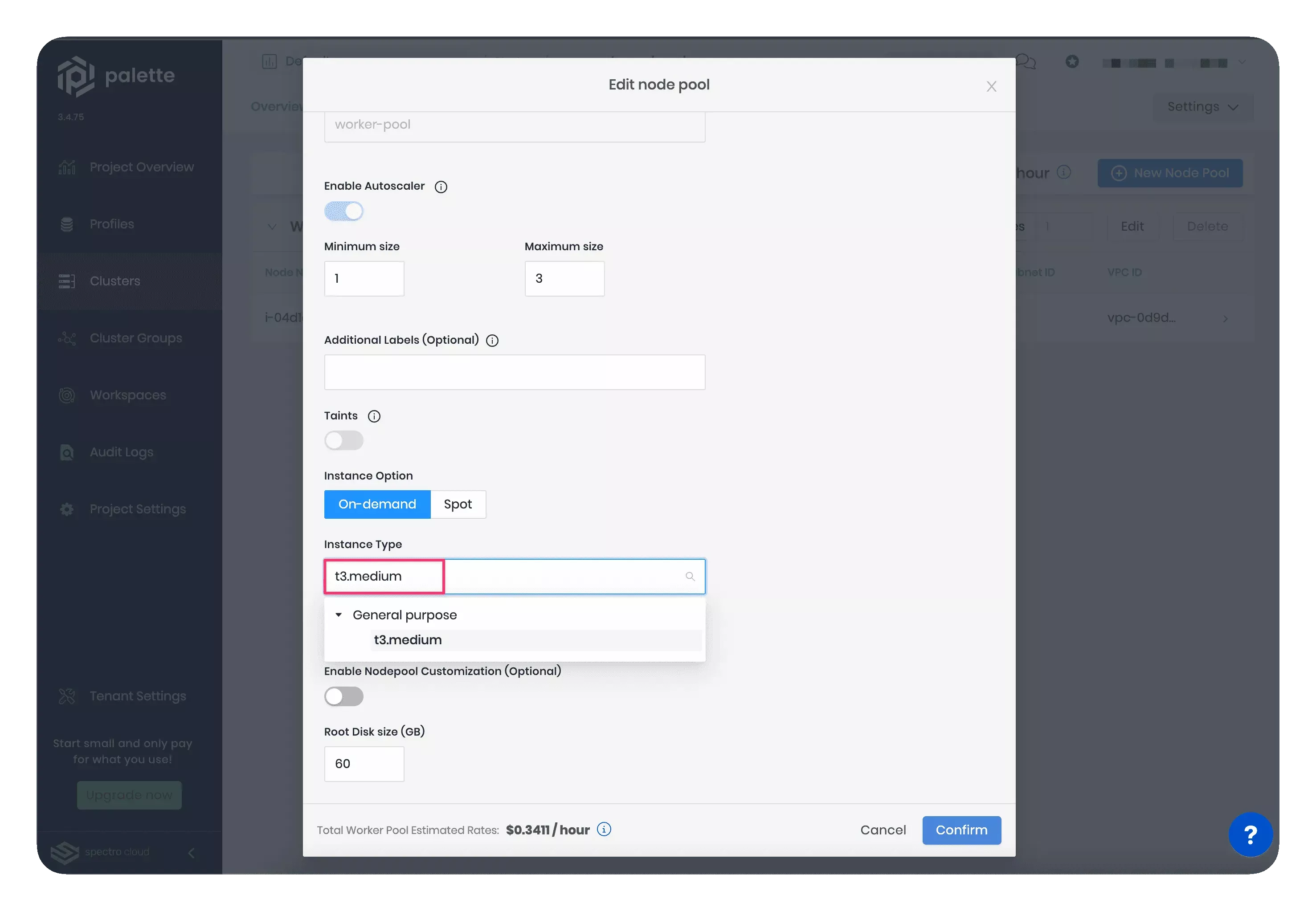

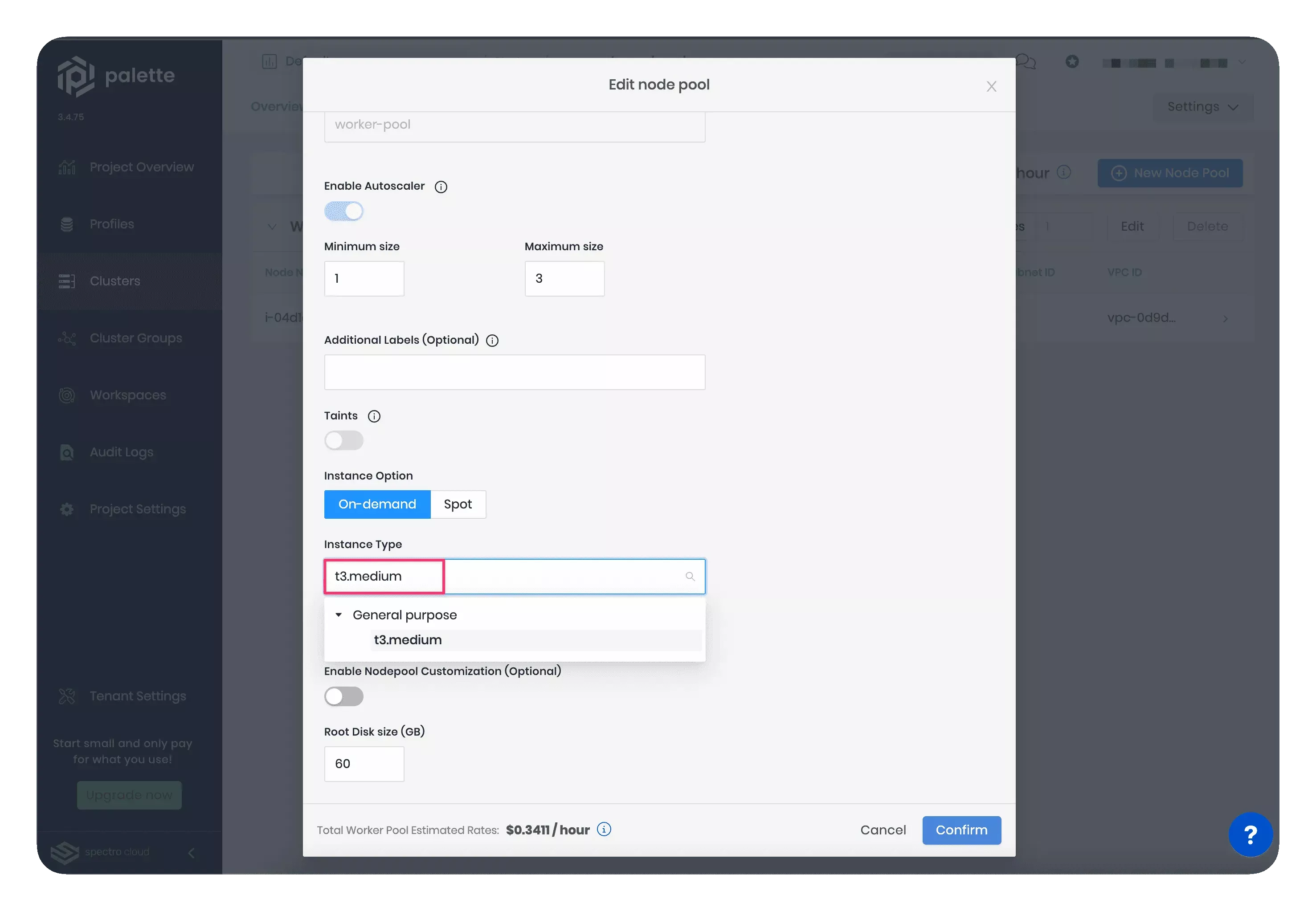

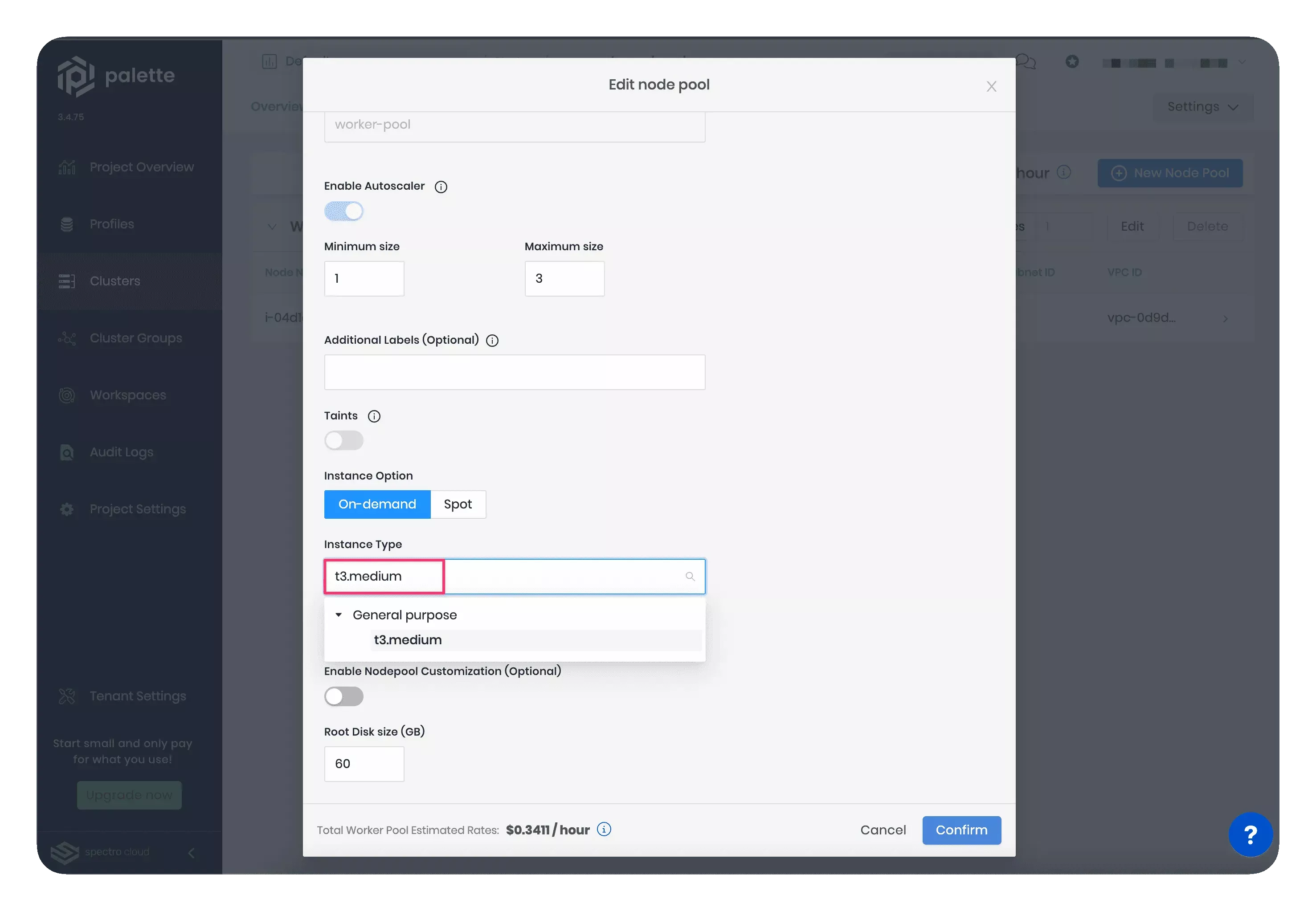

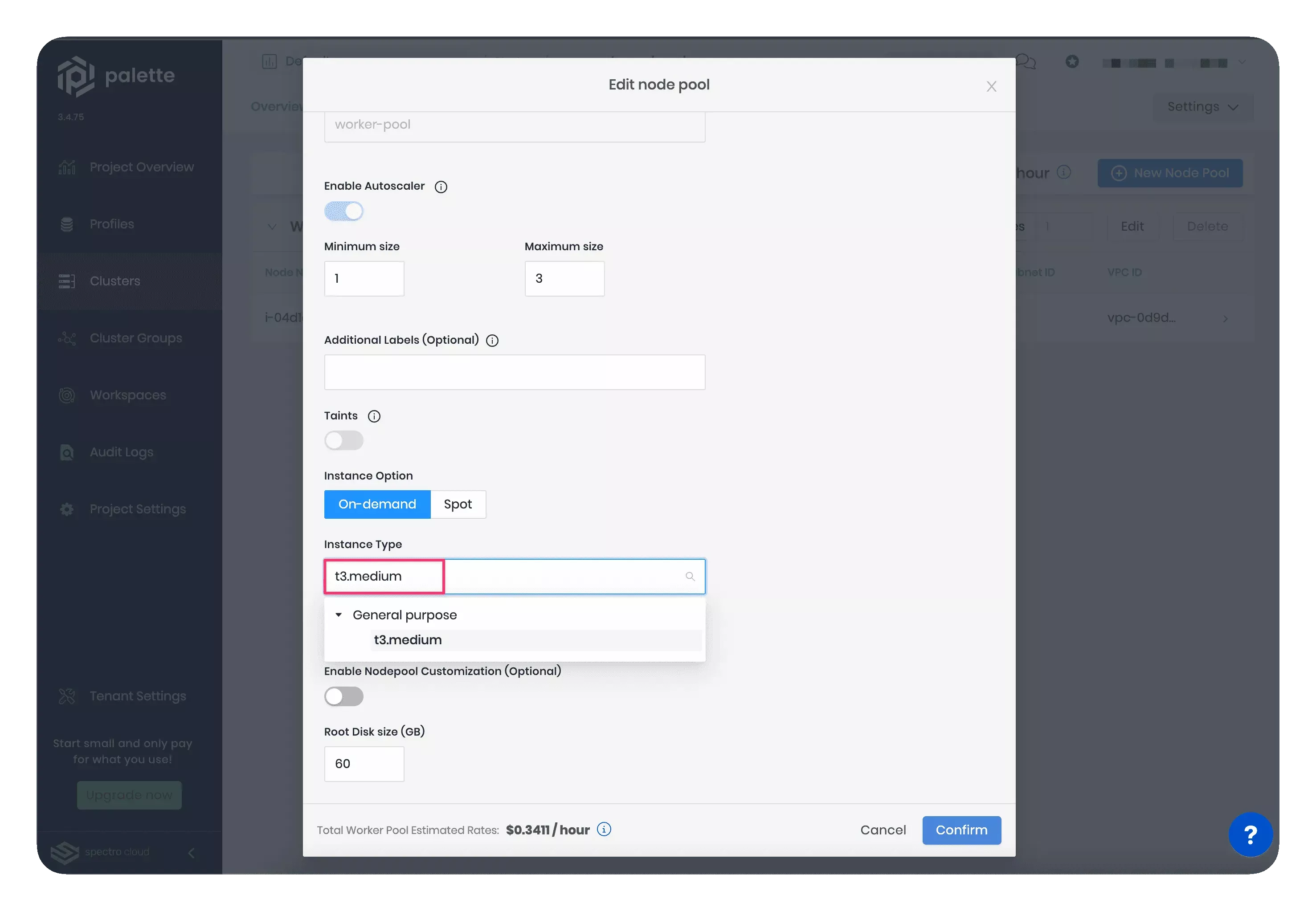

Manually reduce the instance size in the worker pool configuration to t3.medium (2 vCPUs, 8 GB RAM). The snapshot below shows how to change the instance size in the node pool configuration.

-

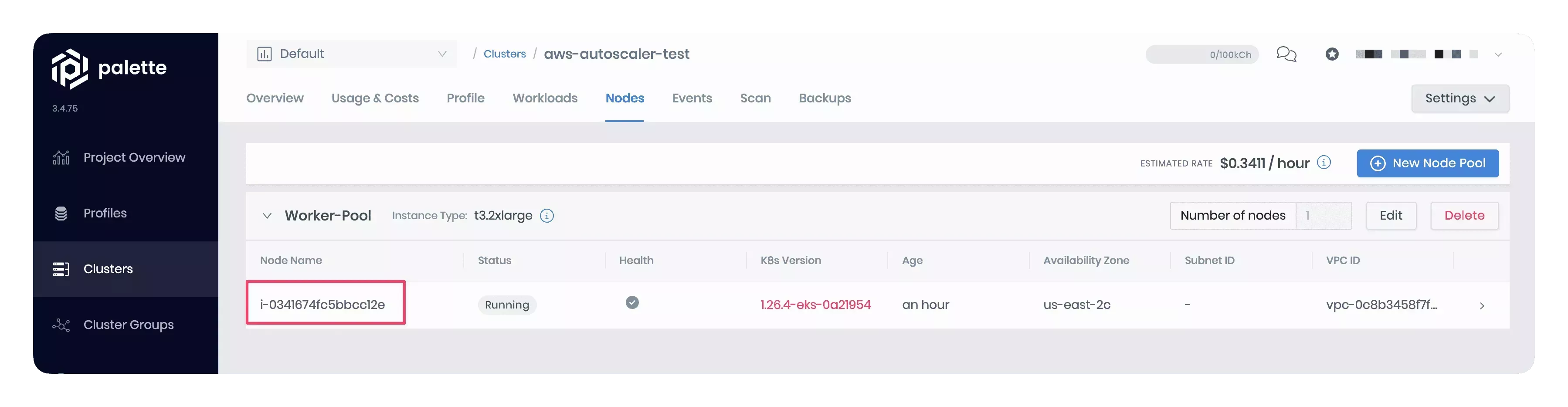

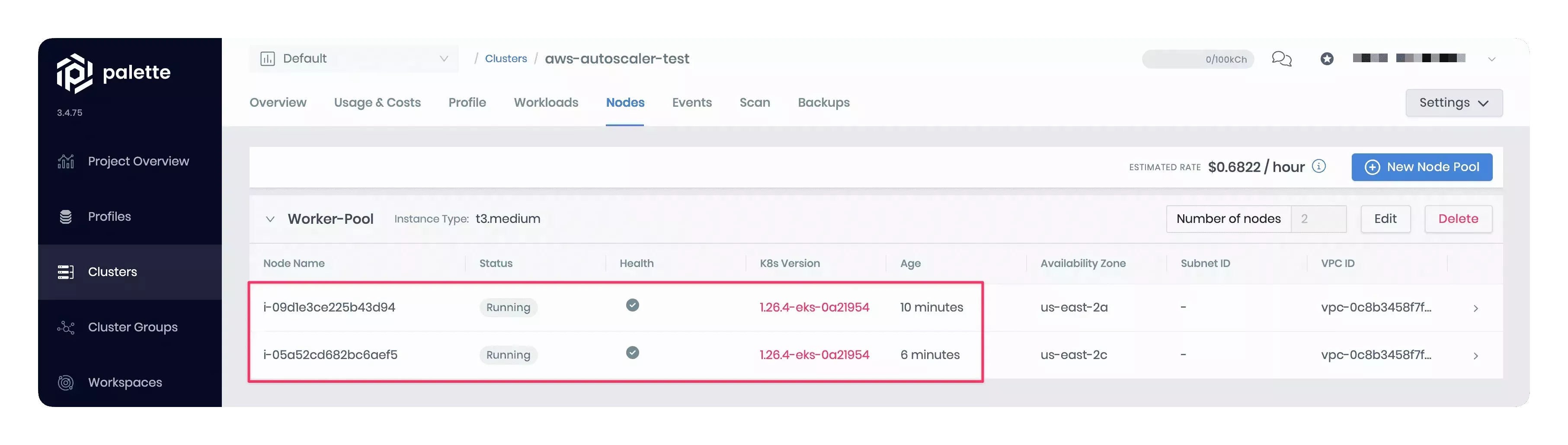

Wait a few minutes for the new nodes to be provisioned. Reducing the node size will make the Cluster Autoscaler shut down the large node and provision smaller-sized nodes with enough capacity to accommodate the current workload. Note that the new node count will be within the minimum and maximum limits specified in the worker pool configuration wizard.

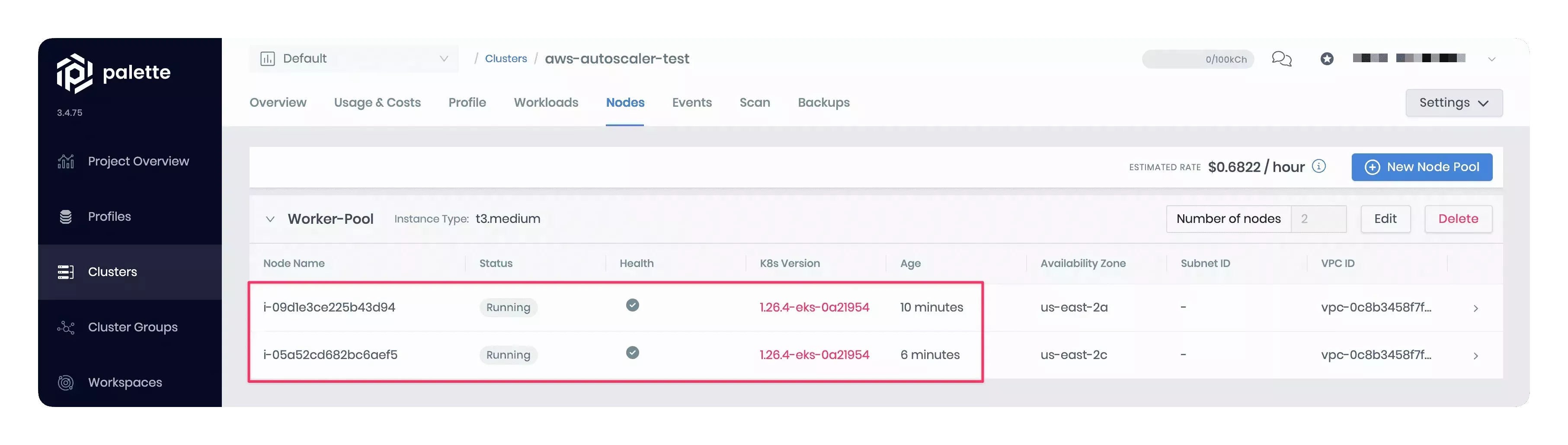

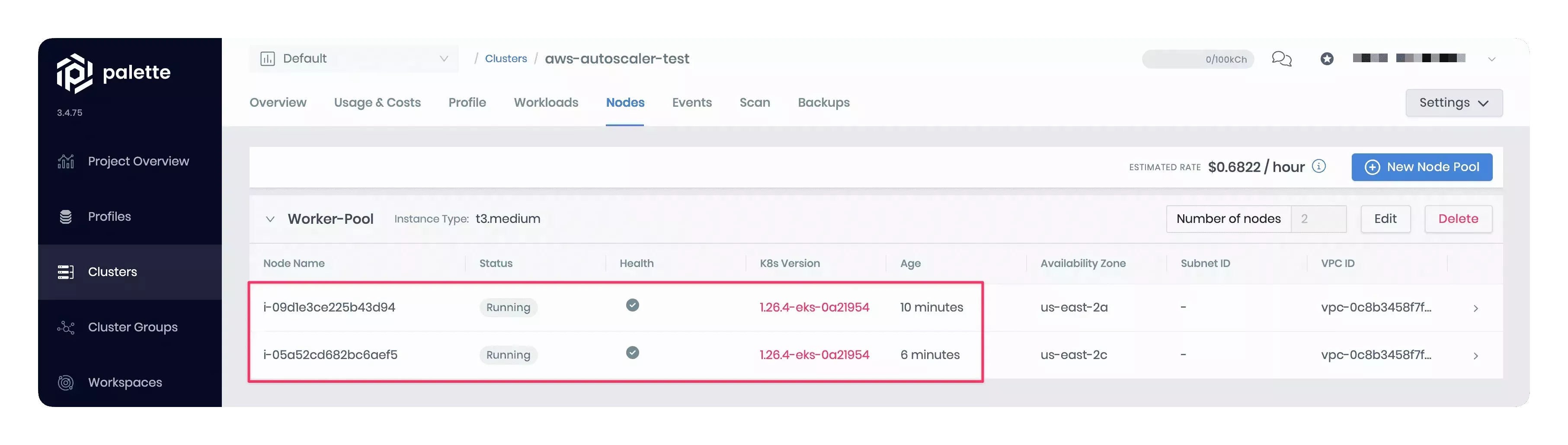

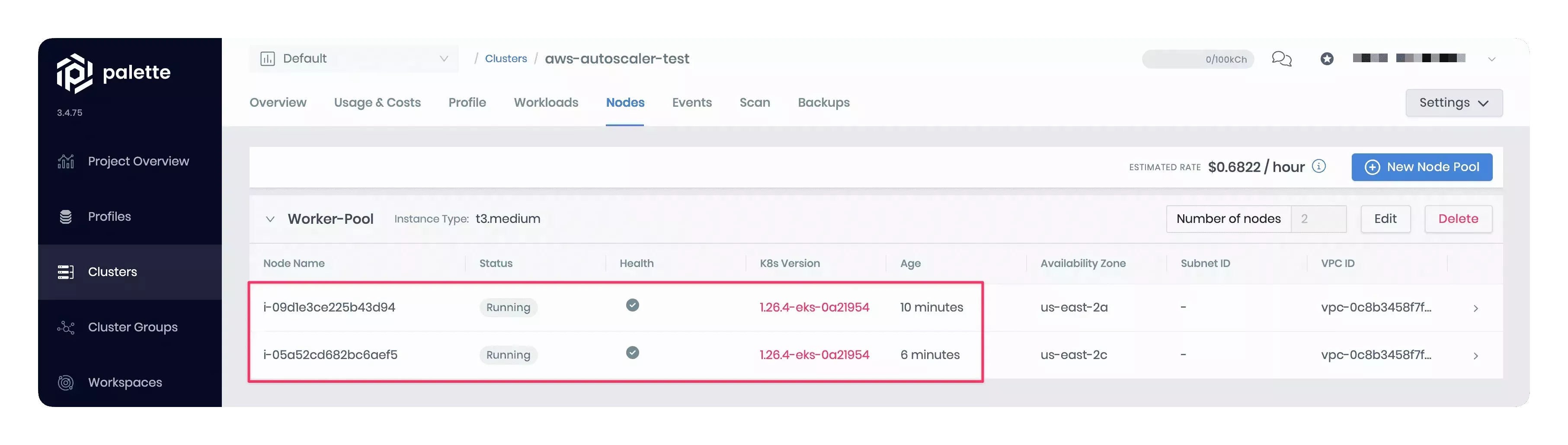

The snapshot below displays the newly created t3.medium nodes. These two smaller-sized nodes will efficiently manage the same workload as the single larger-sized node.
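To confirm the result outside the Palette UI, you can group the worker nodes by instance type. The sketch assumes the well-known node.kubernetes.io/instance-type node label, which is set on AWS nodes, and uses CoreV1Api.list_node from the kubernetes Python client. The helper names are hypothetical, and the capacity table covers only the two instance types used in this example.

```python
from collections import Counter
from typing import Any, Tuple

INSTANCE_TYPE_LABEL = "node.kubernetes.io/instance-type"

# vCPUs and memory (GiB) for the two instance types used in this example.
INSTANCE_SHAPES = {"t3.2xlarge": (8, 32), "t3.medium": (2, 4)}


def nodes_by_instance_type(core_v1: Any) -> Counter:
    """Count nodes per instance type; nodes without the label count as 'unknown'."""
    counts: Counter = Counter()
    for node in core_v1.list_node().items:
        labels = node.metadata.labels or {}
        counts[labels.get(INSTANCE_TYPE_LABEL, "unknown")] += 1
    return counts


def total_capacity(counts: Counter) -> Tuple[int, int]:
    """Sum vCPUs and GiB across nodes whose type is listed in INSTANCE_SHAPES."""
    cpu = mem = 0
    for instance_type, count in counts.items():
        if instance_type in INSTANCE_SHAPES:
            node_cpu, node_mem = INSTANCE_SHAPES[instance_type]
            cpu += node_cpu * count
            mem += node_mem * count
    return cpu, mem
```

For the result described above, two t3.medium nodes add up to 4 vCPUs and 8 GiB, which is less raw capacity than one t3.2xlarge. The smaller pool works only because the workload's requests fit within it, and that fit is what the Cluster Autoscaler evaluates.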

Version 1.28.x

Prerequisites

- An EKS host cluster with Kubernetes 1.28.x or higher.

- Permission to create an IAM policy in the AWS account you use with Palette.

Parameters

| Parameter | Description | Default Value | Required |
|---|---|---|---|
| manifests.aws-cluster-autoscaler.expander | The strategy for choosing which Auto Scaling Group (ASG) to expand. Options are random, most-pods, and least-waste. random scales up a random ASG. most-pods scales up the ASG that can schedule the most pods. least-waste scales up the ASG that leaves the least CPU and memory unused. | least-waste | Yes |

Usage

The manifest-based Cluster Autoscaler pack is available for Amazon EKS host clusters. To deploy the pack, you must first define an IAM policy in the AWS account associated with Palette. This policy allows the Cluster Autoscaler to scale the cluster's node groups.

Use the following steps to create the IAM policy and deploy the Cluster Autoscaler pack.

- In AWS, create a new IAM policy using the snippet below and give it a name, for example, PaletteEKSClusterAutoscaler. Refer to the Creating IAM policies guide for instructions.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeScalingActivities",
        "autoscaling:DescribeTags",
        "ec2:DescribeInstanceTypes",
        "ec2:DescribeLaunchTemplateVersions"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeImages",
        "ec2:GetInstanceTypesFromInstanceRequirements",
        "eks:DescribeNodegroup"
      ],
      "Resource": ["*"]
    }
  ]
}
```

- Copy the IAM policy Amazon Resource Name (ARN). Your policy ARN should be similar to arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler.

- During the cluster profile creation, update the managedMachinePool.roleAdditionalPolicies section in the values.yaml file of the Kubernetes pack with the ARN of the IAM policy you created. Palette attaches the IAM policy to your cluster's node group during cluster deployment. The snapshot below illustrates the specific section to update with the policy ARN.

For example, the code block below displays the updated managedMachinePool.roleAdditionalPolicies section with a sample policy ARN.

```yaml
managedMachinePool:
  # roleName: {{ name of the self-managed role | format "${string}" }}
  # A list of additional policies to attach to the node group role
  roleAdditionalPolicies:
    - "arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler"
```

Tip: Instead of updating the Kubernetes pack's values.yaml file, you can add an inline IAM policy to the cluster's node group after deployment. Refer to the Adding IAM identity permissions guide to learn how to embed an inline policy for a user or role.

- After you have added all the infrastructure pack layers to your cluster profile, add the AWS Cluster Autoscaler pack.

- Next, use the cluster profile you created to deploy a cluster. In the Nodes Config section, specify the minimum and maximum number of worker pool nodes and the instance type that suits your workload. Your worker pool always has at least the minimum number of nodes you set, and scale-up never exceeds the maximum number of nodes you configure. Each node pool you configure corresponds to one ASG.

The snapshot below displays an example of the cluster's Nodes Config section.

Tip: You can also edit the minimum and maximum number of worker pool nodes for a deployed cluster directly in the Palette UI.

Resize the Cluster

In this example, you will resize the worker pool nodes to better understand the scaling behavior of the Cluster Autoscaler pack. First, you will create a cluster with large-sized worker pool instances. Then, you will manually reduce the instance size. This will lead to insufficient resources for existing pods and multiple pod failures in the cluster. As a result, the Cluster Autoscaler will provision new smaller-sized nodes with enough capacity to accommodate the current workload and reschedule the affected pods on new nodes. Follow the steps below to trigger the Cluster Autoscaler and the pod rescheduling event.

- During the cluster deployment, in the Nodes Config section, choose a large instance type. For example, you can select t3.2xlarge (8 vCPUs, 32 GB RAM) or larger for the worker pool.

- Once the cluster is deployed, go to the Nodes tab on the cluster details page in Palette. Observe the number and size of the nodes. The snapshot below displays one node of type t3.2xlarge in the cluster's worker pool.

- Manually reduce the instance size in the worker pool configuration to t3.medium (2 vCPUs, 4 GB RAM). The snapshot below shows how to change the instance size in the node pool configuration.

- Wait a few minutes for the new nodes to be provisioned. After you reduce the node size, the Cluster Autoscaler shuts down the large node and provisions smaller nodes with enough capacity to accommodate the current workload. The new node count stays within the minimum and maximum limits specified in the worker pool configuration.

The snapshot below displays the newly created t3.medium nodes. These two smaller-sized nodes will efficiently manage the same workload as the single larger-sized node.

Version 1.27.x

Prerequisites

- An EKS host cluster with Kubernetes 1.27.x or higher.

- Permission to create an IAM policy in the AWS account you use with Palette.

Parameters

| Parameter | Description | Default Value | Required |
|---|---|---|---|
| manifests.aws-cluster-autoscaler.expander | The strategy for choosing which Auto Scaling Group (ASG) to expand. Options are random, most-pods, and least-waste. random scales up a random ASG. most-pods scales up the ASG that can schedule the most pods. least-waste scales up the ASG that leaves the least CPU and memory unused. | least-waste | Yes |

Usage

The manifest-based Cluster Autoscaler pack is available for Amazon EKS host clusters. To deploy the pack, you must first define an IAM policy in the AWS account associated with Palette. This policy allows the Cluster Autoscaler to scale the cluster's node groups.

Use the following steps to create the IAM policy and deploy the Cluster Autoscaler pack.

- In AWS, create a new IAM policy using the snippet below and give it a name, for example, PaletteEKSClusterAutoscaler. Refer to the Creating IAM policies guide for instructions.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeScalingActivities",
        "autoscaling:DescribeTags",
        "ec2:DescribeInstanceTypes",
        "ec2:DescribeLaunchTemplateVersions"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeImages",
        "ec2:GetInstanceTypesFromInstanceRequirements",
        "eks:DescribeNodegroup"
      ],
      "Resource": ["*"]
    }
  ]
}
```

- Copy the IAM policy Amazon Resource Name (ARN). Your policy ARN should be similar to arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler.

- During the cluster profile creation, update the managedMachinePool.roleAdditionalPolicies section in the values.yaml file of the Kubernetes pack with the ARN of the IAM policy you created. Palette attaches the IAM policy to your cluster's node group during cluster deployment. The snapshot below illustrates the specific section to update with the policy ARN.

For example, the code block below displays the updated managedMachinePool.roleAdditionalPolicies section with a sample policy ARN.

```yaml
managedMachinePool:
  # roleName: {{ name of the self-managed role | format "${string}" }}
  # A list of additional policies to attach to the node group role
  roleAdditionalPolicies:
    - "arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler"
```

Tip: Instead of updating the Kubernetes pack's values.yaml file, you can add an inline IAM policy to the cluster's node group after deployment. Refer to the Adding IAM identity permissions guide to learn how to embed an inline policy for a user or role.

- After you have added all the infrastructure pack layers to your cluster profile, add the AWS Cluster Autoscaler pack.

- Next, use the cluster profile you created to deploy a cluster. In the Nodes Config section, specify the minimum and maximum number of worker pool nodes and the instance type that suits your workload. Your worker pool always has at least the minimum number of nodes you set, and scale-up never exceeds the maximum number of nodes you configure. Each node pool you configure corresponds to one ASG.

The snapshot below displays an example of the cluster's Nodes Config section.

Tip: You can also edit the minimum and maximum number of worker pool nodes for a deployed cluster directly in the Palette UI.

Resize the Cluster

In this example, you will resize the worker pool nodes to better understand the scaling behavior of the Cluster Autoscaler pack. First, you will create a cluster with large-sized worker pool instances. Then, you will manually reduce the instance size. This will lead to insufficient resources for existing pods and multiple pod failures in the cluster. As a result, the Cluster Autoscaler will provision new smaller-sized nodes with enough capacity to accommodate the current workload and reschedule the affected pods on new nodes. Follow the steps below to trigger the Cluster Autoscaler and the pod rescheduling event.

- During the cluster deployment, in the Nodes Config section, choose a large instance type. For example, you can select t3.2xlarge (8 vCPUs, 32 GB RAM) or larger for the worker pool.

- Once the cluster is deployed, go to the Nodes tab on the cluster details page in Palette. Observe the number and size of the nodes. The snapshot below displays one node of type t3.2xlarge in the cluster's worker pool.

- Manually reduce the instance size in the worker pool configuration to t3.medium (2 vCPUs, 4 GB RAM). The snapshot below shows how to change the instance size in the node pool configuration.

- Wait a few minutes for the new nodes to be provisioned. After you reduce the node size, the Cluster Autoscaler shuts down the large node and provisions smaller nodes with enough capacity to accommodate the current workload. The new node count stays within the minimum and maximum limits specified in the worker pool configuration.

The snapshot below displays the newly created t3.medium nodes. These two smaller-sized nodes will efficiently manage the same workload as the single larger-sized node.

Version 1.26.x

Prerequisites

- An EKS host cluster with Kubernetes 1.26.x or higher.

- Permission to create an IAM policy in the AWS account you use with Palette.

Parameters

| Parameter | Description | Default Value | Required |
|---|---|---|---|
| manifests.aws-cluster-autoscaler.expander | The strategy for choosing which Auto Scaling Group (ASG) to expand. Options are random, most-pods, and least-waste. random scales up a random ASG. most-pods scales up the ASG that can schedule the most pods. least-waste scales up the ASG that leaves the least CPU and memory unused. | least-waste | Yes |

Usage

The manifest-based Cluster Autoscaler pack is available for Amazon EKS host clusters. To deploy the pack, you must first define an IAM policy in the AWS account associated with Palette. This policy allows the Cluster Autoscaler to scale the cluster's node groups.

Use the following steps to create the IAM policy and deploy the Cluster Autoscaler pack.

- In AWS, create a new IAM policy using the snippet below and give it a name, for example, PaletteEKSClusterAutoscaler. Refer to the Creating IAM policies guide for instructions.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeScalingActivities",
        "autoscaling:DescribeTags",
        "ec2:DescribeInstanceTypes",
        "ec2:DescribeLaunchTemplateVersions"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeImages",
        "ec2:GetInstanceTypesFromInstanceRequirements",
        "eks:DescribeNodegroup"
      ],
      "Resource": ["*"]
    }
  ]
}
```

- Copy the IAM policy Amazon Resource Name (ARN). Your policy ARN should be similar to arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler.

- During the cluster profile creation, update the managedMachinePool.roleAdditionalPolicies section in the values.yaml file of the Kubernetes pack with the ARN of the IAM policy you created. Palette attaches the IAM policy to your cluster's node group during cluster deployment. The snapshot below illustrates the specific section to update with the policy ARN.

For example, the code block below displays the updated managedMachinePool.roleAdditionalPolicies section with a sample policy ARN.

```yaml
managedMachinePool:
  # roleName: {{ name of the self-managed role | format "${string}" }}
  # A list of additional policies to attach to the node group role
  roleAdditionalPolicies:
    - "arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler"
```

Tip: Instead of updating the Kubernetes pack's values.yaml file, you can add an inline IAM policy to the cluster's node group after deployment. Refer to the Adding IAM identity permissions guide to learn how to embed an inline policy for a user or role.

- After you have added all the infrastructure pack layers to your cluster profile, add the AWS Cluster Autoscaler pack.

- Next, use the cluster profile you created to deploy a cluster. In the Nodes Config section, specify the minimum and maximum number of worker pool nodes and the instance type that suits your workload. Your worker pool always has at least the minimum number of nodes you set, and scale-up never exceeds the maximum number of nodes you configure. Each node pool you configure corresponds to one ASG.

The snapshot below displays an example of the cluster's Nodes Config section.

Tip: You can also edit the minimum and maximum number of worker pool nodes for a deployed cluster directly in the Palette UI.

Resize the Cluster

In this example, you will resize the worker pool nodes to better understand the scaling behavior of the Cluster Autoscaler pack. First, you will create a cluster with large-sized worker pool instances. Then, you will manually reduce the instance size. This will lead to insufficient resources for existing pods and multiple pod failures in the cluster. As a result, the Cluster Autoscaler will provision new smaller-sized nodes with enough capacity to accommodate the current workload and reschedule the affected pods on new nodes. Follow the steps below to trigger the Cluster Autoscaler and the pod rescheduling event.

- During the cluster deployment, in the Nodes Config section, choose a large instance type. For example, you can select t3.2xlarge (8 vCPUs, 32 GB RAM) or larger for the worker pool.

- Once the cluster is deployed, go to the Nodes tab on the cluster details page in Palette. Observe the number and size of the nodes. The snapshot below displays one node of type t3.2xlarge in the cluster's worker pool.

- Manually reduce the instance size in the worker pool configuration to t3.medium (2 vCPUs, 4 GB RAM). The snapshot below shows how to change the instance size in the node pool configuration.

- Wait a few minutes for the new nodes to be provisioned. After you reduce the node size, the Cluster Autoscaler shuts down the large node and provisions smaller nodes with enough capacity to accommodate the current workload. The new node count stays within the minimum and maximum limits specified in the worker pool configuration.

The snapshot below displays the newly created t3.medium nodes. These two smaller-sized nodes will efficiently manage the same workload as the single larger-sized node.

Version 1.22.x

Prerequisites

- An EKS host cluster with Kubernetes 1.19.x or higher.

- Permission to create an IAM policy in the AWS account you use with Palette.

Parameters

| Parameter | Description | Default Value | Required |
|---|---|---|---|
| manifests.aws-cluster-autoscaler.expander | The strategy for choosing which Auto Scaling Group (ASG) to expand. Options are random, most-pods, and least-waste. random scales up a random ASG. most-pods scales up the ASG that can schedule the most pods. least-waste scales up the ASG that leaves the least CPU and memory unused. | least-waste | Yes |

Usage

The manifest-based Cluster Autoscaler pack is available for Amazon EKS host clusters. To deploy the pack, you must first define an IAM policy in the AWS account associated with Palette. This policy allows the Cluster Autoscaler to scale the cluster's node groups.

Use the following steps to create the IAM policy and deploy the Cluster Autoscaler pack.

- In AWS, create a new IAM policy using the snippet below and give it a name, for example, PaletteEKSClusterAutoscaler. Refer to the Creating IAM policies guide for instructions.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeScalingActivities",
        "autoscaling:DescribeTags",
        "ec2:DescribeInstanceTypes",
        "ec2:DescribeLaunchTemplateVersions"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeImages",
        "ec2:GetInstanceTypesFromInstanceRequirements",
        "eks:DescribeNodegroup"
      ],
      "Resource": ["*"]
    }
  ]
}
```

- Copy the IAM policy Amazon Resource Name (ARN). Your policy ARN should be similar to arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler.

- During the cluster profile creation, update the managedMachinePool.roleAdditionalPolicies section in the values.yaml file of the Kubernetes pack with the ARN of the IAM policy you created. Palette attaches the IAM policy to your cluster's node group during cluster deployment. The snapshot below illustrates the specific section to update with the policy ARN.

For example, the code block below displays the updated managedMachinePool.roleAdditionalPolicies section with a sample policy ARN.

```yaml
managedMachinePool:
  # roleName: {{ name of the self-managed role | format "${string}" }}
  # A list of additional policies to attach to the node group role
  roleAdditionalPolicies:
    - "arn:aws:iam::123456789:policy/PaletteEKSClusterAutoscaler"
```

Tip: Instead of updating the Kubernetes pack's values.yaml file, you can add an inline IAM policy to the cluster's node group after deployment. Refer to the Adding IAM identity permissions guide to learn how to embed an inline policy for a user or role.

- After you have added all the infrastructure pack layers to your cluster profile, add the AWS Cluster Autoscaler pack.

- Next, use the cluster profile you created to deploy a cluster. In the Nodes Config section, specify the minimum and maximum number of worker pool nodes and the instance type that suits your workload. Your worker pool always has at least the minimum number of nodes you set, and scale-up never exceeds the maximum number of nodes you configure. Each node pool you configure corresponds to one ASG.

The snapshot below displays an example of the cluster's Nodes Config section.

Tip: You can also edit the minimum and maximum number of worker pool nodes for a deployed cluster directly in the Palette UI.

Resize the Cluster

In this example, you will resize the worker pool nodes to better understand the scaling behavior of the Cluster Autoscaler pack. First, you will create a cluster with large-sized worker pool instances. Then, you will manually reduce the instance size. This will lead to insufficient resources for existing pods and multiple pod failures in the cluster. As a result, the Cluster Autoscaler will provision new smaller-sized nodes with enough capacity to accommodate the current workload and reschedule the affected pods on new nodes. Follow the steps below to trigger the Cluster Autoscaler and the pod rescheduling event.

- During the cluster deployment, in the Nodes Config section, choose a large instance type. For example, you can select t3.2xlarge (8 vCPUs, 32 GB RAM) or larger for the worker pool.

- Once the cluster is deployed, go to the Nodes tab on the cluster details page in Palette. Observe the number and size of the nodes. The snapshot below displays one node of type t3.2xlarge in the cluster's worker pool.

- Manually reduce the instance size in the worker pool configuration to t3.medium (2 vCPUs, 4 GB RAM). The snapshot below shows how to change the instance size in the node pool configuration.

- Wait a few minutes for the new nodes to be provisioned. After you reduce the node size, the Cluster Autoscaler shuts down the large node and provisions smaller nodes with enough capacity to accommodate the current workload. The new node count stays within the minimum and maximum limits specified in the worker pool configuration.

The snapshot below displays the newly created t3.medium nodes. These two smaller-sized nodes will efficiently manage the same workload as the single larger-sized node.

Deprecated

All versions earlier than 1.22.x are deprecated. Upgrade to a newer version to take advantage of new features.

Troubleshooting

Scenario - Quota Limits Exceeded

You may observe the LimitExceeded: Cannot exceed quota for PoliciesPerRole:10 error in the cluster deployment logs. This can happen when the default IAM role that Palette creates for the node group already has 10 policies attached and you try to attach one more. By default, your AWS account has a quota of 10 managed policies per IAM role. To work around this, follow the instructions in the IAM object quotas guide to request a quota increase.
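Before requesting a quota increase, you can check how close the node group role is to the limit. The sketch uses boto3's IAM list_attached_role_policies and follows the Marker while IsTruncated is true. The role name is whatever Palette created for your node group and must be looked up in your account; the helper names are hypothetical, and the default of 10 comes from the error message above.

```python
from typing import Any, Dict

DEFAULT_MANAGED_POLICIES_PER_ROLE = 10  # from the PoliciesPerRole:10 error message


def attached_policy_count(iam_client: Any, role_name: str) -> int:
    """Count managed policies attached to a role, following pagination markers."""
    count = 0
    kwargs: Dict[str, Any] = {"RoleName": role_name}
    while True:
        page = iam_client.list_attached_role_policies(**kwargs)
        count += len(page["AttachedPolicies"])
        if not page.get("IsTruncated"):
            return count
        kwargs["Marker"] = page["Marker"]


def has_room_for_policy(iam_client: Any, role_name: str,
                        quota: int = DEFAULT_MANAGED_POLICIES_PER_ROLE) -> bool:
    """True when one more managed policy can be attached without exceeding the quota."""
    return attached_policy_count(iam_client, role_name) < quota
```

Call it with boto3.client("iam") and the node group role name. If your account's quota has already been raised, pass the new value as quota.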

Scenario - IAM Authenticator not Found

You may encounter an executable aws-iam-authenticator not found error in your terminal when you try to access your EKS cluster from your local machine. This can happen when the aws-iam-authenticator plugin is missing from your local environment. To work around this, install the IAM Authenticator. Refer to the IAM Authenticator install guide for more information.
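A quick way to confirm that the missing plugin is the cause is to check whether aws-iam-authenticator is on your PATH. This sketch uses only Python's standard shutil.which; the function name is hypothetical, and it does not install anything.

```python
import shutil
from typing import Optional


def find_authenticator(binary: str = "aws-iam-authenticator") -> Optional[str]:
    """Return the full path of the binary if it is on PATH, otherwise None."""
    return shutil.which(binary)


if __name__ == "__main__":
    path = find_authenticator()
    if path is None:
        print("aws-iam-authenticator not found on PATH; install it and retry.")
    else:
        print(f"Found aws-iam-authenticator at {path}")
```

If the binary is installed but still not found, the directory it was installed into is likely missing from the PATH of the shell you run kubectl from.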

Terraform

You can reference the AWS Cluster Autoscaler pack in Terraform with a data resource.

```hcl
data "spectrocloud_registry" "public_registry" {
  name = "Public Repo"
}

data "spectrocloud_pack_simple" "aws-cluster-autoscaler" {
  name         = "aws-cluster-autoscaler"
  version      = "1.29.2"
  type         = "helm"
  registry_uid = data.spectrocloud_registry.public_registry.id
}
```